Introduction to Pinecone and Its Role in AI

Pinecone is a managed vector database built for similarity search and large-scale machine learning. Designed to ease the data-management problems that AI workflows typically run into, Pinecone offers adaptive indexing and straightforward integration with existing tools, which makes it a valuable tool for AI engineers and data scientists alike. In this post, we look at how to get the most out of Pinecone, showing how its architecture and features can be used to optimize large-scale AI applications.

As AI systems scale and require increasingly complex computations, the need for efficient data management and rapid query execution becomes paramount. Pinecone’s high-performance querying and adaptive indexing capabilities enable developers to tackle these challenges head-on, ensuring rapid responses even when dealing with vast amounts of vectorized data. In the following sections, we will explore strategies to enhance Pinecone performance, address data synchronization issues, scale your applications effectively, and integrate Pinecone within your existing data ecosystems.

Understanding the Challenges of Large-Scale AI Applications

Large-scale AI applications bring a suite of unique challenges, including managing immense datasets, ensuring low-latency responses, and maintaining synchronization across distributed systems. Common issues include:

- Data Volume and Velocity: As the amount of data increases, keeping indexes updated in real time without compromising search accuracy is a critical challenge.

- Resource Constraints: Balancing computational resources with storage efficiency requires a robust architecture that dynamically adapts to workload demands.

- Synchronization and Consistency: Integrating data seamlessly from multiple sources while keeping the vector database consistent can be daunting, especially in environments that demand real-time updates.

Pinecone addresses several of these challenges with scalable indexing and a serverless architecture that decouples storage from compute (for background on serverless design, see https://blog.whoisjsonapi.com/embracing-serverless-computing-a-comprehensive-guide-to-design-architectures-and-best-practices/). Because compute is not permanently tied to stored data, this approach helps keep costs down and keeps the service available under heavy load.

Strategies for Optimizing Pinecone’s Performance

To unlock the true potential of Pinecone, it is essential to consider advanced strategies and techniques. Among them, adaptive indexing stands out as a key enabler of performance optimization. Pinecone utilizes a log-structured indexing approach that is adept at managing both small and expansive datasets.
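To make the log-structured idea concrete, here is a toy sketch in Python. It is a hypothetical illustration of the general pattern (small immutable segments written first, merged later by compaction), not Pinecone's implementation; the class and method names are invented for this example, and brute-force cosine scoring stands in for a real approximate index.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class LogStructuredIndex:
    """Toy log-structured index (hypothetical, not Pinecone's code).

    Writes go to a small mutable buffer. When the buffer fills, it is frozen
    into an immutable segment. compact() merges segments; a real system would
    build a heavier ANN structure at that point, while this sketch just keeps
    a dict and scans it by brute force. Deletes are not modeled.
    """

    def __init__(self, segment_size: int = 4) -> None:
        self.segment_size = segment_size
        self.buffer: Dict[str, Vector] = {}
        self.segments: List[Dict[str, Vector]] = []  # oldest first

    def upsert(self, vid: str, vec: Vector) -> None:
        self.buffer[vid] = vec
        if len(self.buffer) >= self.segment_size:
            self.segments.append(self.buffer)  # freeze as a new segment
            self.buffer = {}

    def compact(self) -> None:
        merged: Dict[str, Vector] = {}
        for seg in self.segments:  # oldest to newest, so newer ids overwrite
            merged.update(seg)
        self.segments = [merged] if merged else []

    def query(self, q: Vector, top_k: int = 3) -> List[Tuple[str, float]]:
        latest: Dict[str, Vector] = {}
        # Scan newest data first so a fresh write shadows older copies of an id.
        for seg in [self.buffer] + self.segments[::-1]:
            for vid, vec in seg.items():
                latest.setdefault(vid, vec)
        scored = [(vid, cosine(q, vec)) for vid, vec in latest.items()]
        scored.sort(key=lambda item: item[1], reverse=True)
        return scored[:top_k]
```

In a production system, compaction is where a more elaborate index structure would be built over the merged data; small fresh segments stay cheap to write because they skip that work.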

Adaptive Indexing for Scalability: For smaller datasets, techniques such as scalar quantization or random projections are employed to minimize computational overhead. As your dataset grows, Pinecone seamlessly transitions to more elaborate indexing methods during compaction. For a deep dive into these techniques and their benefits, refer to the post on Optimizing Pinecone for agents (and more).
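Scalar quantization and random projection are both simple enough to sketch in a few lines. The Python below shows the generic techniques under our own assumptions (8-bit codes with a per-vector min/max range, a Gaussian projection matrix); it is not Pinecone's internal code, and the function names are hypothetical.

```python
import random
from typing import List, Tuple

Vector = List[float]


def scalar_quantize(vec: Vector, bits: int = 8) -> Tuple[List[int], float, float]:
    """Map each float to an integer code in [0, 2**bits - 1] using the
    vector's own min/max range. Returns (codes, lo, step)."""
    lo, hi = min(vec), max(vec)
    levels = 2 ** bits - 1
    step = (hi - lo) / levels if hi > lo else 1.0  # avoid dividing by zero
    codes = [round((x - lo) / step) for x in vec]
    return codes, lo, step


def dequantize(codes: List[int], lo: float, step: float) -> Vector:
    """Approximate reconstruction; error per value is at most step / 2."""
    return [lo + c * step for c in codes]


def random_projection(dim_in: int, dim_out: int, seed: int = 0) -> List[Vector]:
    """Gaussian random matrix (dim_out x dim_in) for reducing dimensionality."""
    rng = random.Random(seed)
    scale = 1.0 / dim_out ** 0.5  # keeps projected lengths roughly comparable
    return [[rng.gauss(0.0, 1.0) * scale for _ in range(dim_in)]
            for _ in range(dim_out)]


def project(matrix: List[Vector], vec: Vector) -> Vector:
    """Multiply the projection matrix by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]
```

Quantization shrinks each dimension to a small integer at the cost of a bounded rounding error, while random projection reduces the number of dimensions while approximately preserving distances.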

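Additionally, index configuration and query parameters have a large effect on performance. Pinecone manages the underlying approximate nearest neighbor algorithm itself, so instead of choosing between structures such as HNSW or IVF directly, you choose the dimension and similarity metric (cosine, euclidean, or dotproduct) when you create an index, plus the pod type for pod-based indexes. At query time you tune parameters such as top_k and metadata filters. Choosing the metric your embedding model was trained for, and requesting no more results than you need, helps keep queries fast as data volumes grow.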
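The effect of the metric choice is easy to see with a brute-force search. This sketch scores vectors with cosine, euclidean, and dotproduct similarity (the metric names Pinecone accepts) using plain Python; the helper names are hypothetical, and euclidean distances are negated so that higher is always better, which is a convention of this example rather than how Pinecone reports scores.

```python
import math
from typing import Dict, List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def similarity(metric: str, a: Vector, b: Vector) -> float:
    """Return a score where higher always means 'more similar'."""
    if metric == "dotproduct":
        return dot(a, b)  # rewards both direction and magnitude
    if metric == "cosine":
        norm = math.sqrt(dot(a, a)) * math.sqrt(dot(b, b))
        return dot(a, b) / norm if norm else 0.0  # direction only
    if metric == "euclidean":
        return -math.dist(a, b)  # negate so closer vectors score higher
    raise ValueError(f"unknown metric: {metric!r}")


def top_k(metric: str, query: Vector, items: Dict[str, Vector], k: int) -> List[str]:
    """Brute-force ranking of item ids by the chosen metric."""
    ranked = sorted(items, key=lambda vid: similarity(metric, query, items[vid]),
                    reverse=True)
    return ranked[:k]
```

With the query `[1.0, 0.0]` and items `{"short": [1.0, 0.0], "long": [3.0, 1.0]}`, dotproduct ranks `"long"` first because it rewards magnitude, while cosine and euclidean both prefer `"short"`.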

Data Synchronization Techniques for Pinecone

Efficient data synchronization is essential for keeping a vector database current. Pinecone users can rely on synchronization tools such as Airbyte to automate the ingestion and updating of records from diverse sources. Airbyte offers namespace options such as Destination Default, Custom Namespace, and Source Namespace, which determine the namespace of your Pinecone index that each record is written to.
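One way to picture the namespace options is as a rule that picks a target namespace for each incoming record. The sketch below is hypothetical: the mode strings, the `SourceRecord` type, and the custom template are assumptions for illustration, not Airbyte configuration keys, so check Airbyte's documentation for the connector's exact behavior.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SourceRecord:
    stream: str                      # e.g. a table name in the source
    source_namespace: Optional[str]  # e.g. a schema name, if the source has one
    record_id: str


def resolve_namespace(record: SourceRecord, mode: str,
                      default_ns: str = "default",
                      custom_template: str = "{source_namespace}_{stream}") -> str:
    """Pick the target index namespace for a record (hypothetical modes)."""
    if mode == "destination_default":
        return default_ns  # every record lands in one fixed namespace
    if mode == "source_namespace":
        return record.source_namespace or default_ns  # mirror the source
    if mode == "custom":
        return custom_template.format(
            source_namespace=record.source_namespace or default_ns,
            stream=record.stream,
        )
    raise ValueError(f"unknown mode: {mode!r}")
```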

This streamlines data management and makes AI workflows more efficient to operate. Detailed insights into integrating Airbyte with Pinecone are available on Airbyte’s feature page. Event-driven architectures, in which each change is pushed to the index as it happens rather than collected in periodic bulk loads, can further improve synchronization (see https://blog.whoisjsonapi.com/implementing-event-driven-architectures-in-go-a-practical-guide/).
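An event-driven consumer has to cope with events that arrive late or more than once. This minimal Python sketch assumes each change event carries a per-record version number from the source (an assumption, not something Pinecone or Airbyte provides automatically) and uses an in-memory dict as a stand-in for the index; all names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class ChangeEvent:
    op: str                                     # "upsert" or "delete"
    record_id: str
    version: int                                # increases per record at the source
    values: Optional[Tuple[float, ...]] = None  # required for upserts


class IndexMirror:
    """In-memory stand-in for a vector index that consumes change events.

    Events may arrive late or twice; a per-record version check makes
    applying them idempotent and drops stale updates.
    """

    def __init__(self) -> None:
        self.vectors: Dict[str, Tuple[float, ...]] = {}
        self.versions: Dict[str, int] = {}  # kept after deletes, as tombstones

    def apply(self, event: ChangeEvent) -> bool:
        """Apply an event; return False if it was stale and ignored."""
        if event.op not in ("upsert", "delete"):
            raise ValueError(f"unknown op: {event.op!r}")
        if event.op == "upsert" and event.values is None:
            raise ValueError("upsert events need values")
        if event.version <= self.versions.get(event.record_id, -1):
            return False  # stale or duplicate delivery: ignore
        self.versions[event.record_id] = event.version
        if event.op == "upsert":
            self.vectors[event.record_id] = event.values
        else:
            self.vectors.pop(event.record_id, None)
        return True
```

Keeping the version after a delete acts as a tombstone, so a delayed upsert cannot resurrect a record that was already removed.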

Moreover, establishing real-time data pipelines ensures that your database remains current, thereby empowering real-time decision-making. Learn more about the implementation of these real-time updates here.
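Real-time pipelines usually trade a little latency for efficiency by micro-batching writes. The sketch below buffers records and flushes when a batch is full or the oldest record has waited too long; `flush_fn` is a hypothetical hook where a real pipeline would call the index's upsert method, and the default batch size and wait time are placeholders rather than Pinecone limits.

```python
import time
from typing import Callable, List, Optional


class MicroBatcher:
    """Buffer incoming records and flush them in batches, either when the
    batch is full or when the oldest buffered record has waited too long."""

    def __init__(self, flush_fn: Callable[[List[dict]], None],
                 max_batch: int = 100, max_wait_s: float = 1.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.flush_fn = flush_fn      # hypothetical: e.g. wraps an index upsert
        self.max_batch = max_batch
        self.max_wait_s = max_wait_s
        self.clock = clock            # injectable so tests can control time
        self.buffer: List[dict] = []
        self.first_at: Optional[float] = None

    def add(self, record: dict) -> None:
        if not self.buffer:
            self.first_at = self.clock()  # start the wait timer
        self.buffer.append(record)
        if len(self.buffer) >= self.max_batch:
            self.flush()

    def tick(self) -> None:
        """Call periodically; flushes a partial batch that has aged out."""
        if self.buffer and self.clock() - self.first_at >= self.max_wait_s:
            self.flush()

    def flush(self) -> None:
        if self.buffer:
            batch, self.buffer = self.buffer, []
            self.first_at = None
            self.flush_fn(batch)
```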

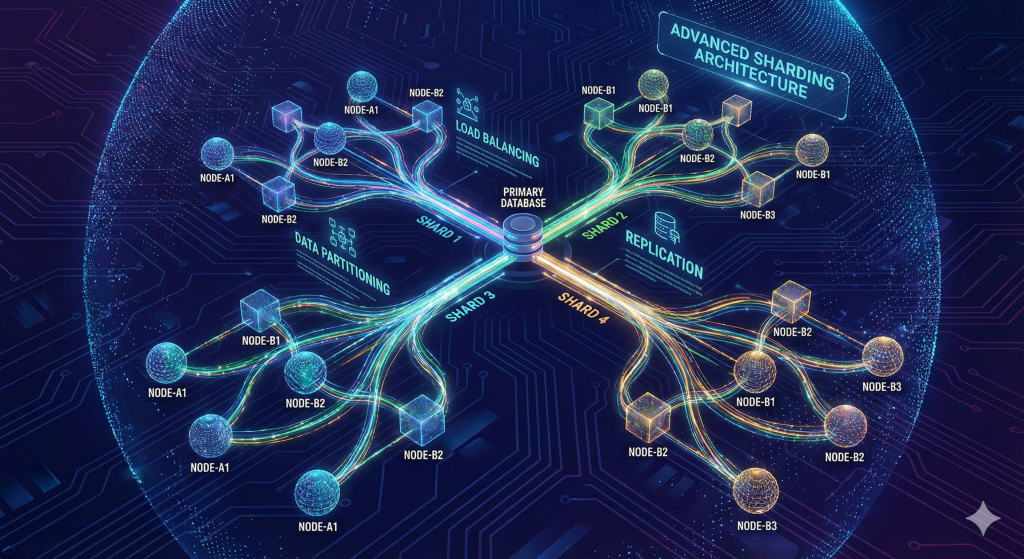

Scaling Pinecone for Large-Scale Applications

Scaling is at the heart of any successful large-scale AI application. Pinecone’s serverless architecture offers significant advantages here by decoupling storage from compute resources (see https://blog.whoisjsonapi.com/embracing-serverless-computing-a-comprehensive-guide-to-design-architectures-and-best-practices/). This design lets the vector database page portions of indexes in from object storage on demand, reducing the need for permanently provisioned, always-on compute resources.
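On-demand paging behaves much like a cache in front of slow storage. The LRU cache below is only a conceptual analogy, assuming a hypothetical `fetch` function that reads a segment from object storage; it does not describe how Pinecone actually caches index data.

```python
from collections import OrderedDict
from typing import Any, Callable


class SegmentCache:
    """LRU cache that loads index segments from slow storage on demand."""

    def __init__(self, fetch: Callable[[str], Any], capacity: int) -> None:
        self.fetch = fetch          # e.g. a read from object storage
        self.capacity = capacity    # max segments held in memory
        self.cache: "OrderedDict[str, Any]" = OrderedDict()
        self.misses = 0

    def get(self, segment_id: str) -> Any:
        if segment_id in self.cache:
            self.cache.move_to_end(segment_id)  # mark as most recently used
            return self.cache[segment_id]
        self.misses += 1
        data = self.fetch(segment_id)
        self.cache[segment_id] = data
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict least recently used
        return data
```

Memory then scales with the working set rather than with total index size, which is the property that makes always-on compute less necessary.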

This flexibility helps developers keep a solution cost-effective and scalable regardless of application size. For instance, integration with cloud storage such as Amazon S3 offers an efficient, economical way to manage large volumes of data; the Pinecone documentation covers cloud storage integration in more detail. To keep your deployment pipelines scalable as well, consider continuous integration and deployment (CI/CD) practices (see https://blog.whoisjsonapi.com/implementing-continuous-integration-and-deployment-pipelines-for-microservices).

Integrating Pinecone with Existing Data Storage Solutions

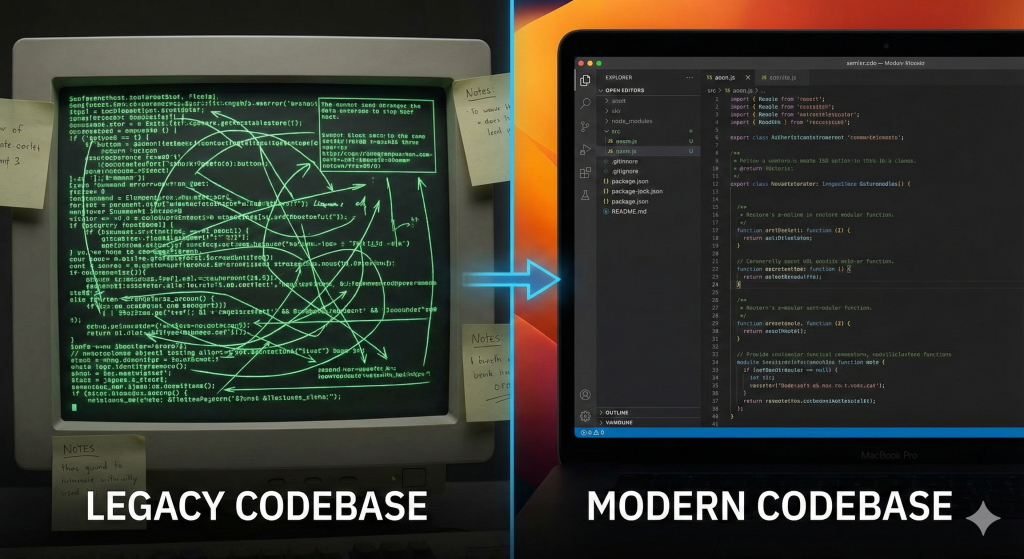

For many enterprises, integrating new technologies with legacy systems is essential. Pinecone provides integration points with existing data storage infrastructure, including cloud storage services like Amazon S3 (see also https://blog.whoisjsonapi.com/building-ai-applications-with-python-a-step-by-step-guide-to-python-for-ai-and-how-to-make-ai-with-python). By creating a storage integration, you can import data from your bucket directly into your Pinecone index instead of upserting it record by record, which simplifies bulk loading.
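Before a bulk import, it can pay to check records locally so that a malformed file does not fail late. This hypothetical helper assumes records shaped like Pinecone's, with an `id` string and a `values` list whose length must match the index dimension; the function name and the specific checks are our own choices.

```python
import math
from typing import Iterable, List, Mapping


def validate_records(records: Iterable[Mapping], dimension: int) -> List[str]:
    """Return human-readable problems; an empty list means the batch
    passed these (deliberately simple) checks."""
    problems: List[str] = []
    seen = set()
    for i, rec in enumerate(records):
        rid = rec.get("id")
        values = rec.get("values")
        # Ids must be non-empty strings and unique within the batch.
        if not isinstance(rid, str) or not rid:
            problems.append(f"record {i}: missing or non-string id")
        elif rid in seen:
            problems.append(f"record {i}: duplicate id {rid!r}")
        else:
            seen.add(rid)
        # Vectors must match the index dimension and contain finite numbers.
        if values is None or len(values) != dimension:
            problems.append(f"record {i}: expected {dimension} values")
        elif not all(math.isfinite(v) for v in values):
            problems.append(f"record {i}: non-finite value")
    return problems
```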

This integration helps keep data consistent across platforms and also simplifies migration. As your AI workflows grow, the ability to integrate Pinecone with data lakes or warehouses will become increasingly valuable for maintaining a holistic, synchronized data environment.
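Consistency across platforms can be checked rather than assumed. This sketch compares a source snapshot with a snapshot read back from the index and reports missing, extra, and changed records using content hashes; how you obtain the two snapshots is left open, and the function names are hypothetical.

```python
import hashlib
import json
from typing import Dict, Mapping, Set, Tuple


def fingerprint(record: Mapping) -> str:
    """Stable SHA-256 of a record's content (keys sorted, so order is ignored)."""
    payload = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def reconcile(source: Dict[str, Mapping],
              index: Dict[str, Mapping]) -> Tuple[Set[str], Set[str], Set[str]]:
    """Return (missing from index, extra in index, changed content) id sets."""
    missing = set(source.keys() - index.keys())
    extra = set(index.keys() - source.keys())
    changed = {rid for rid in source.keys() & index.keys()
               if fingerprint(source[rid]) != fingerprint(index[rid])}
    return missing, extra, changed
```

Missing ids point to records that still need an upsert, extra ids to deletes that never propagated, and changed ids to stale content.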

Case Studies: Successful Implementations of Pinecone

Real-world implementations provide compelling evidence of Pinecone’s capabilities in managing large-scale AI challenges. Companies across diverse industries have leveraged Pinecone to optimize their search capabilities and manage vast vectorized datasets efficiently.

For example, companies that combine real-time synchronization techniques with adaptive indexing have observed clear improvements in query performance and system reliability. These results highlight both the robustness of Pinecone and the value of solutions tailored to the specific needs of a large-scale deployment.

While specific company names and case studies may be proprietary, industry publications like this comprehensive guide on Medium offer valuable insights into the best practices and implementation strategies that drive success in production environments.

Future Trends and Developments in Pinecone and AI

As AI continues to evolve, so too does the technology that underpins it. Future developments in Pinecone are likely to focus on further refinements in adaptive indexing, enhanced real-time data integration, and deeper integrations with cloud-native services. The expansion of serverless architectures and evolving data synchronization techniques will further improve performance and scalability.

Furthermore, emerging generative AI and machine learning workloads will demand even more robust vector databases, and Pinecone is well positioned to support these next-generation applications (see https://blog.whoisjsonapi.com/bridging-the-gap-integrating-generative-and-agentic-ai-in-software-engineering). Ongoing academic and industry research is likely to bring new applications and improvements in this space.

Conclusion: Unlocking New Possibilities with Optimized Pinecone

Optimizing Pinecone for large-scale AI applications is not just about tackling today’s challenges; it is also about preparing for tomorrow’s opportunities. By leveraging advanced indexing strategies, efficient synchronization techniques, and scalable architectures, developers can fully harness the potential of the Pinecone vector database to transform AI workflows.

Whether you are integrating Pinecone with legacy systems, deploying complex real-time data pipelines, or scaling up for future AI demands, the strategies and insights discussed in this post offer a roadmap for unlocking new possibilities in your data-driven projects.

As you move forward in your AI endeavors, remember that continuous adaptation and integration of new methodologies are key to staying ahead in this rapidly evolving field.

F.A.Q.

Q: What is Pinecone?

A: Pinecone is a specialized vector database optimized for similarity search and large-scale machine learning applications. It is designed to efficiently manage and query high-dimensional data, making it ideal for AI applications.

Q: How does Pinecone handle scalability?

A: Pinecone uses a log-structured indexing approach and an architecture that decouples storage from compute, allowing it to scale gracefully. Its integration with cloud storage solutions and its adaptive indexing techniques help maintain high performance even as data volumes grow.

Q: Can Pinecone be integrated with existing data storage solutions?

A: Yes, Pinecone supports integration with various data storage solutions, including cloud-based services like Amazon S3 (see also https://blog.whoisjsonapi.com/building-ai-applications-with-python-a-step-by-step-guide-to-python-for-ai-and-how-to-make-ai-with-python). This enables data import and synchronization that help keep your data consistent across systems.

Q: What strategies can be used to optimize Pinecone?

A: Strategies include adaptive indexing, efficient data synchronization using tools like Airbyte, real-time data updates, and fine-tuning of query performance through optimal index configurations and similarity metrics.

Conclusion

Harnessing the full potential of Pinecone in large-scale AI applications requires a deep understanding of both its capabilities and the challenges inherent in managing vast, dynamic datasets. By following the strategies discussed—from adaptive indexing and real-time data updates to seamless integration with cloud storage solutions—you can transform AI workflows and drive significant improvements in performance and efficiency. Pinecone is more than just a vector database; it is a catalyst for innovation in the field of advanced AI. Embracing these techniques not only ensures your systems remain efficient and scalable but also positions your organization at the forefront of AI technology advancements.