Introduction to Ollama Models and Their Deployment Challenges

Rapid advances in AI and machine learning have reshaped the way we deploy and manage models. Ollama models, known for their strong performance, come with unique deployment challenges. Issues such as resource management, scalability, and maintenance can significantly affect how reliably they run in production. In this blog post, we explore an in-depth Docker strategy for deploying multiple Ollama models, addressing key challenges and providing actionable insights. With increasing demands for real-time processing and efficient resource utilization, understanding these challenges is the first step toward achieving robust deployments. For a comprehensive approach to deployment pipelines, refer to Implementing Continuous Integration and Deployment Pipelines for Microservices.

Why Choose Docker for Model Deployment?

Docker has transformed the way developers deploy applications by encapsulating services into lightweight, portable containers. There are several reasons why Docker is an ideal choice for deploying multiple Ollama models:

- Isolation: Each container runs in its own isolated environment, ensuring that multiple models do not interfere with one another. This isolation facilitates easier troubleshooting and maintenance.

- Consistency: Docker containers guarantee that the runtime environment remains consistent from development through production, reducing bugs related to environment differences.

- Scalability: Containers can be easily scaled both horizontally and vertically. This is vital for performance optimization when running resource-intensive models.

- Flexibility: By setting up an efficient Docker strategy for deploying multiple Ollama models, you can leverage GPU acceleration, network configuration, and resource limiting to fine-tune the deployment process.

Official Docker images provided by Ollama and best practices recommended by industry experts make it a compelling solution. For instance, the Ollama Docker deployment guide on DeepWiki outlines the benefits of using official images.

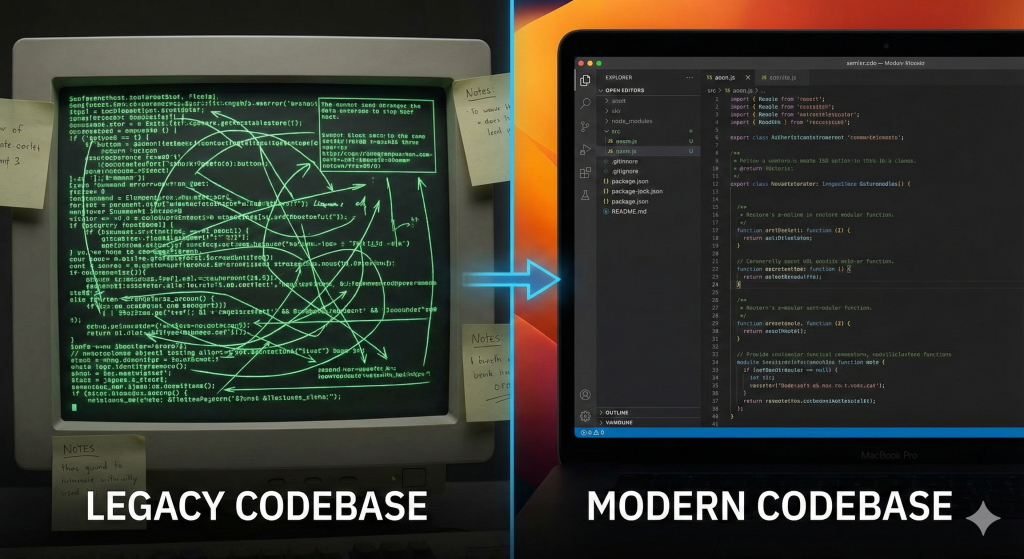

Setting Up Your Environment: Preparing Docker for Multiple Models

Creating a well-prepared environment is critical for ensuring smooth deployment. Start by installing Docker on your host machine and pulling the official Ollama images from Docker Hub. It is highly recommended to create your own Dockerfile for consistent replication of your environment.

The Dockerfile can be customized to include necessary dependencies, environment variables, and configurations. Below is a sample Dockerfile snippet that demonstrates these principles:

    # Use the official Ollama image as the base
    FROM ollama/ollama:latest

    # Store models under /models (OLLAMA_MODELS is the variable Ollama reads)
    ENV OLLAMA_MODELS=/models

    # Copy custom configuration or scripts
    COPY config/ /etc/ollama/

    # Install additional libraries if needed, then clear the apt cache
    RUN apt-get update && apt-get install -y python3-pip && rm -rf /var/lib/apt/lists/*

    # Document the port the Ollama API listens on (11434 by default)
    EXPOSE 11434

    # The base image's entrypoint is the ollama binary, so only the subcommand is needed
    CMD ["serve"]

This configuration follows the best practices outlined on Medium and sets the stage for managing multiple models efficiently. Additionally, integrating Agentic AI into your DevOps processes can further enhance your deployment workflow, as discussed in Integrating Agentic AI into DevOps: Enhancing CI/CD Automation.
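As a rough sketch of how an image built from a Dockerfile like the one above might be built and started, the script below wraps the Docker CLI calls in small functions. The image tag `my-ollama:latest`, the volume name `ollama-models`, and the `DOCKER` override variable are assumptions made for illustration. Port 11434 is Ollama's default API port, and `/root/.ollama` is where the official image keeps its data unless `OLLAMA_MODELS` points elsewhere.

```shell
#!/bin/sh
# Sketch: build a custom Ollama image and start one container from it.
# DOCKER can be overridden (e.g. DOCKER=echo) to preview commands without running them.
DOCKER="${DOCKER:-docker}"
IMAGE="${IMAGE:-my-ollama:latest}"          # hypothetical image tag
MODELS_DIR="${MODELS_DIR:-/root/.ollama}"   # image default; change if OLLAMA_MODELS is set

build_image() {
    # Build from the Dockerfile in the given directory (default: current directory).
    "$DOCKER" build -t "$IMAGE" "${1:-.}"
}

start_container() {
    # $1 = container name, $2 = host port mapped to Ollama's API port (11434).
    "$DOCKER" run -d --name "$1" \
        -p "$2:11434" \
        -v "ollama-models:$MODELS_DIR" \
        "$IMAGE"
}

pull_model() {
    # $1 = container name, $2 = model name; downloads the model inside the container.
    "$DOCKER" exec "$1" ollama pull "$2"
}
```

A typical sequence would be `build_image .`, then `start_container ollama-main 11434`, then `pull_model ollama-main llama3`, where the container and model names are only examples.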

Efficient Docker Configuration for Ollama Models

When deploying multiple Ollama models, an efficient Docker configuration is essential. Several strategies can be applied to optimize your container setup:

- Use Custom Docker Networks: Leverage Docker’s user-defined bridge networks so containers can reach each other by name while internal traffic stays separate from external traffic. This pattern is discussed in depth in Arsturn’s Best Practices.

- Resource Limiting: Constrain CPU and memory usage within containers so as to prevent any single container from monopolizing the host’s resources. This is especially important with high-performance AI workloads. Docker offers flags like --cpus and --memory to help you manage these resources.

- Volume Mounting: Persist data generated by models using Docker volumes. By mounting volumes, you ensure that data remains intact despite container restarts – a crucial step highlighted by industry experts.
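The three points above can be combined in a single `docker run` invocation per model. The following is a minimal sketch, assuming one container per model on a shared user-defined bridge network. The network name `ollama-net`, the per-container volume naming scheme, and the `DOCKER` override variable are illustrative choices, not something the official image requires.

```shell
#!/bin/sh
# Sketch: one container per model on a shared user-defined bridge network,
# each with CPU/memory caps and its own named volume.
DOCKER="${DOCKER:-docker}"
NETWORK="${NETWORK:-ollama-net}"   # hypothetical network name

create_network() {
    # Containers on a user-defined bridge can resolve each other by container name.
    "$DOCKER" network create --driver bridge "$NETWORK"
}

run_limited() {
    # $1 = container name, $2 = host port, $3 = CPU limit, $4 = memory limit.
    "$DOCKER" run -d --name "$1" \
        --network "$NETWORK" \
        --cpus "$3" --memory "$4" \
        -p "$2:11434" \
        -v "$1-data:/root/.ollama" \
        ollama/ollama:latest
}
```

For example, `create_network` followed by `run_limited ollama-a 11434 4 8g` and `run_limited ollama-b 11435 2 6g` would start two isolated instances on different host ports. The limits shown are placeholders; suitable values depend on the models you load.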

This strategic configuration forms the backbone of a robust Docker strategy for deploying multiple Ollama models and ensures consistency and efficiency across environments. For securing your deployment, refer to Building Secure and Efficient MCP Servers: A Comprehensive Guide.

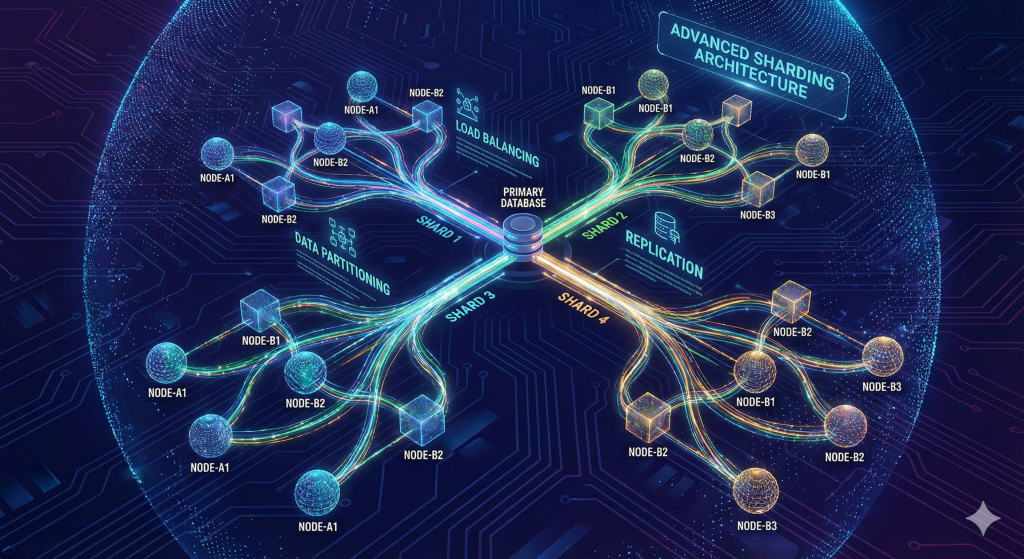

Strategies for Resource Optimization and Load Balancing

Optimizing resource usage is key to ensuring the smooth operation of multiple AI models. Below are some strategies you can adopt:

- GPU Acceleration: Many Ollama models benefit immensely from GPU acceleration. Start your containers with the --gpus flag so they can access the host's GPUs; this requires the NVIDIA Container Toolkit on the host. Tools like nvidia-smi report GPU load, which you can use to adjust how work is distributed across devices.

- Dynamic Resource Allocation: Consider employing orchestration platforms like Kubernetes with Docker to dynamically allocate resources based on demand. This aligns with suggestions from the Arsturn blog, which highlights best practices for resource management.

- Load Balancing: Implement load balancing techniques either by using Docker’s built-in network configurations or external load balancers. Distributing incoming traffic among containers helps ensure no single node is overwhelmed by requests.
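One simple way to distribute GPU work is to pin each model container to a device in round-robin order. The sketch below assumes NVIDIA GPUs with the NVIDIA Container Toolkit installed. The function names and the `DOCKER` override variable are made up for this example, and round-robin is just one possible placement policy.

```shell
#!/bin/sh
# Sketch: spread model containers across GPUs round-robin and check GPU load.
DOCKER="${DOCKER:-docker}"

gpu_for_replica() {
    # $1 = replica index (0-based), $2 = number of GPUs; prints the GPU index to use.
    echo $(( $1 % $2 ))
}

run_on_gpu() {
    # $1 = container name, $2 = host port, $3 = GPU index.
    "$DOCKER" run -d --name "$1" \
        --gpus "device=$3" \
        -p "$2:11434" \
        ollama/ollama:latest
}

gpu_load() {
    # One CSV line per GPU: index, utilization, memory used, memory total.
    nvidia-smi --query-gpu=index,utilization.gpu,memory.used,memory.total \
        --format=csv,noheader
}
```

On a host with two GPUs, `run_on_gpu ollama-2 11436 "$(gpu_for_replica 2 2)"` would place the third replica back on GPU 0. Running `gpu_load` before and after starting containers shows whether the placement is balanced.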

These resource optimization approaches are part of a comprehensive Docker strategy for deploying multiple Ollama models, ensuring you make the best use of your hardware while maintaining high throughput and low latency. For automated scaling solutions, see Integrating Agentic AI into DevOps: Enhancing CI/CD Automation.

Ensuring Scalability and Failover Management

Scalability and failover are major concerns when running multiple, demanding applications simultaneously. Docker’s inherent scalability allows you to horizontally scale your containers as needed. In a production environment, a multi-container environment may require a robust scaling strategy:

- Automated Scaling: Use orchestration tools like Kubernetes or Docker Swarm to automatically scale up or down based on load. These tools provide mechanisms for health checks, which are essential for failover management.

- Fault Tolerance: Designing your system to restart or relocate failing containers prevents downtime. Docker’s restart policies (e.g., --restart always) can be incorporated into your containers to automatically recover from failures.
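A restart policy and a health check can both be set when the container starts. The sketch below is one way to do it, assuming that `ollama list` fails when the server inside the container is not responding; that choice of health command, the timing values, and the `DOCKER` override variable are assumptions for illustration.

```shell
#!/bin/sh
# Sketch: start a container with a restart policy and a health check,
# then query its health status.
DOCKER="${DOCKER:-docker}"

run_resilient() {
    # $1 = container name, $2 = host port.
    "$DOCKER" run -d --name "$1" \
        --restart always \
        --health-cmd "ollama list" \
        --health-interval 30s \
        --health-timeout 10s \
        --health-retries 3 \
        -p "$2:11434" \
        ollama/ollama:latest
}

health_status() {
    # $1 = container name; prints starting, healthy, or unhealthy.
    "$DOCKER" inspect --format '{{.State.Health.Status}}' "$1"
}
```

Note that with plain `docker run`, the restart policy reacts to the container exiting, not to an unhealthy status. Acting on health status (replacing or rescheduling an unhealthy container) is what orchestrators such as Docker Swarm or Kubernetes add on top.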

Developing a comprehensive scalability and failover strategy ensures continuity and responsiveness of your service, even under unexpected load spikes or partial system failures. The best practices detailed in the Arsturn guide are instrumental in this phase. Additionally, incorporating secure practices such as automated API key rotation can further enhance your deployment’s resilience, as outlined in Automating API Key Rotation in Serverless Applications: A Security Essential.

Monitoring and Maintaining Performance in Production

Continuous monitoring is crucial for optimizing and managing the performance of deployed models. Docker provides several tools to assist in monitoring resource usage and container logs:

- Container Logs: Use the docker logs command to track events and troubleshoot issues in real time.

- Resource Metrics: Tools like docker stats or NVIDIA’s nvidia-smi help monitor CPU, memory, and GPU usage. Regular monitoring ensures that your containers are running optimally.

- External Monitoring Tools: Integrate solutions like Prometheus, Grafana, or ELK Stack to visualize and analyze performance metrics over time.
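For quick checks before a full monitoring stack is in place, the built-in commands can be scripted. This is a minimal sketch: the function names, the 100-line log tail, and the `DOCKER` override variable are arbitrary choices for the example. Keep in mind that `docker stats` reports CPU as a percentage of one core, so values above 100% are normal on multi-core hosts.

```shell
#!/bin/sh
# Sketch: pull recent logs, take a resource snapshot, and flag busy containers.
DOCKER="${DOCKER:-docker}"

recent_logs() {
    # $1 = container name; prints the last 100 log lines with timestamps.
    "$DOCKER" logs --tail 100 --timestamps "$1"
}

stats_snapshot() {
    # One line per running container: name, CPU percent, memory usage.
    "$DOCKER" stats --no-stream --format '{{.Name}} {{.CPUPerc}} {{.MemUsage}}'
}

over_cpu() {
    # Reads stats_snapshot output on stdin; prints names whose CPU% exceeds $1.
    awk -v limit="$1" '{ cpu = $2; sub(/%/, "", cpu); if (cpu + 0 > limit + 0) print $1 }'
}
```

Piping the snapshot through the filter, as in `stats_snapshot | over_cpu 200`, lists containers using more than two cores' worth of CPU. The same output could be fed to an alerting script or scraped into Prometheus.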

Maintaining robust monitoring is an integral part of any Docker strategy for deploying multiple Ollama models. Keeping an eye on these metrics helps preempt potential failures and optimize performance continuously. For further optimization techniques, refer to Building AI Applications with Python: A Step-by-Step Guide.

Case Study: Successful Deployment of Ollama Models Using Docker

To illustrate these concepts in action, let’s look at a real-world case study where a tech company successfully deployed multiple Ollama models using Docker. The company adopted the following steps:

- Preparation: Developed a comprehensive Dockerfile and leveraged official Ollama images for a consistent development environment.

- Resource Management: Employed GPU acceleration and set strict resource limits, which allowed each model to run optimally without interference, as recommended by Arsturn.

- Scalability: Implemented an automated scaling solution using Kubernetes. Automated health checks and restart policies ensured high availability and minimal downtime.

- Monitoring: Established a logging and monitoring pipeline using Docker logs combined with Prometheus and Grafana, enabling proactive performance adjustments.

As a result, the company not only achieved seamless deployment of multiple Ollama models but also maintained a robust and scalable production environment. This case illustrates the practical applications of a well-crafted Docker strategy for deploying multiple Ollama models.

Frequently Asked Questions (FAQ)

Q: What is the main benefit of using Docker for deploying Ollama models?

A: Docker provides an isolated, consistent, and scalable environment that simplifies the deployment process and enhances reliability. It enables efficient resource management across multiple models.

Q: How do official Docker images help in this deployment?

A: Official Docker images ensure pre-configured environments optimized for the task. They reduce the setup overhead, thereby allowing you to focus on optimizing model performance. More details can be found in the Docker Deployment guide by DeepWiki.

Q: Can I use GPU acceleration within Docker containers?

A: Yes, Docker containers can be configured to utilize GPU acceleration. This is essential for resource-intensive models and is supported through the NVIDIA Container Toolkit (the successor to nvidia-docker), along with proper resource constraints as detailed on Arsturn.

Conclusion and Best Practices for Future Deployments

Deploying multiple Ollama models efficiently demands a robust and well-planned Docker strategy. In this post, we covered everything from the basic setup to advanced configuration, resource optimization, and scalability solutions. Being methodical about network configuration, resource limits, and monitoring is essential to ensure reliable production environments.

By adhering to these best practices, such as using official Docker images, configuring accurate resource limits, and leveraging orchestration tools for scalability, you set up a foundation that not only meets current demand but is also primed for future expansion. The insights provided by experts at Arsturn and Medium reinforce the importance of rigorous planning and execution.

In summary, a well-executed Docker strategy for deploying multiple Ollama models can not only streamline your workflows but also provide resilience and scalability in production environments. Embrace these practices to drive success in your AI-driven projects and stay ahead in the fast-evolving landscape of machine learning deployment.