Understanding Serverless Computing: An Introduction

Serverless computing has emerged as a significant shift in how applications are developed and deployed. It abstracts away the complexity of managing the underlying infrastructure, allowing developers and businesses to focus on writing code and delivering value. In this model, the cloud provider handles server management, from provisioning to scaling, which frees up resources and accelerates innovation.

For IT professionals and developers, serverless means a reduced time-to-market, enhanced agility, and an infrastructure that dynamically scales according to workload. This guide dives deep into how serverless computing works, its benefits, and the best practices for designing efficient serverless applications.

Key Benefits of Going Serverless

Serverless computing brings several advantages to modern application development. Here are some of the most significant:

Cost Efficiency

With the pay-as-you-go model, you pay only for the resources your application actually consumes, not for idle capacity. For instance, Capaciteam reports that clients deploying on Google Cloud's serverless offerings achieve up to a 75% reduction in infrastructure costs while deploying applications 95% faster.

Automated Scalability

Serverless architectures allocate resources automatically based on demand, so your application can absorb traffic surges without manual intervention. You no longer need to over-provision capacity in anticipation of peak traffic, and because idle capacity is not billed, costs fall during off-peak periods.
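How much capacity the platform allocates can be estimated with Little's law: the number of instances running at once is roughly the request rate multiplied by the average execution time. AWS documents the same relation for Lambda concurrency. The sketch below applies it to a few hypothetical traffic levels; the function name and the numbers are illustrative assumptions, not a provider API.

```python
def required_concurrency(requests_per_second: int, avg_duration_ms: int) -> int:
    """Estimate how many function instances must run at the same time.

    Little's law: concurrency = arrival rate x time each request spends running.
    Integer ceiling division avoids floating-point rounding surprises.
    """
    if requests_per_second < 0 or avg_duration_ms < 0:
        raise ValueError("inputs must be non-negative")
    return (requests_per_second * avg_duration_ms + 999) // 1000


if __name__ == "__main__":
    # Hypothetical traffic profile for a function averaging 250 ms per request.
    for label, rps in [("night", 0), ("day", 40), ("flash sale", 2000)]:
        print(label, required_concurrency(rps, avg_duration_ms=250))
```

At zero traffic the estimate is zero instances, which is why idle periods cost nothing; at 2,000 requests per second it is 500 concurrent instances, a figure worth checking against the provider's concurrency quota.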

Increased Agility

By removing the overhead of managing servers, serverless lets developers focus on deploying and iterating on their code. According to Capaciteam, companies that transitioned to serverless architectures have seen a 3x increase in development velocity. This accelerated pace of innovation is invaluable in today’s fast-paced tech landscape.

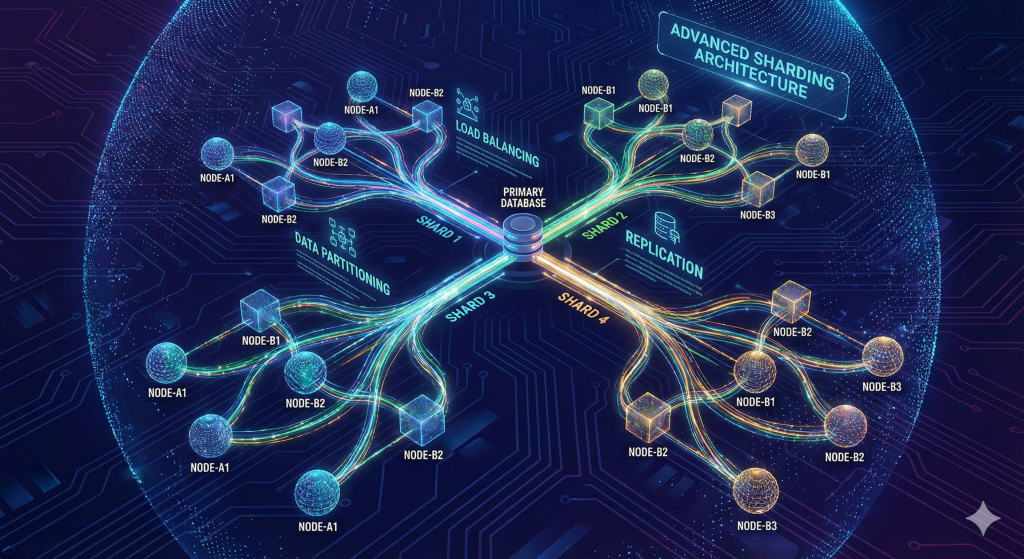

Core Components of Serverless Architecture

A robust serverless architecture is built on several key components that work in harmony to deliver a seamless experience. Here are the fundamental building blocks:

Functions as a Service (FaaS)

FaaS is the most recognizable element of serverless computing. It allows developers to deploy individual functions that automatically run in response to events. This model encapsulates business logic in small, stateless, and independently scalable units.
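To make this concrete, here is a minimal sketch of a single FaaS function in Python. It follows the `handler(event, context)` signature that AWS Lambda uses for Python functions; the event shape (a `name` field) and the HTTP-style response are assumptions chosen for the example.

```python
import json


def handler(event, context):
    """Entry point the platform calls once per event.

    The function is stateless: everything it needs arrives in `event`,
    and `context` (runtime metadata supplied by the platform) is unused here.
    """
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Simulate a single invocation locally.
    print(handler({"name": "serverless"}, None))
```

Because the function holds no state between calls, the platform is free to run as many copies in parallel as the incoming event rate requires.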

Backend as a Service (BaaS)

Beyond functions, serverless platforms offer integrated services such as databases, authentication, and storage. These services offload the responsibility for maintaining the underlying system, further streamlining deployment and scalability.

Event-Driven Architecture

Serverless computing relies heavily on event-driven models, in which events trigger the execution of code. An event could be a file upload, a database update, or a new user registration. Because the components that emit events are decoupled from the functions that consume them, the resulting application architecture is flexible and resilient.
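In production the platform itself does the routing, for example by invoking a function when an object lands in a storage bucket. The in-process registry below is a simplified stand-in that shows the decoupling: producers only emit events, and handlers subscribe to event types. The event type names and payload fields are hypothetical.

```python
from typing import Callable, Dict, List

Handler = Callable[[dict], str]
_routes: Dict[str, Handler] = {}


def on(event_type: str):
    """Register a function to run whenever an event of this type arrives."""
    def register(fn: Handler) -> Handler:
        _routes[event_type] = fn
        return fn
    return register


@on("file.uploaded")
def make_thumbnail(event: dict) -> str:
    return f"thumbnail queued for {event['key']}"


@on("user.registered")
def send_welcome_email(event: dict) -> str:
    return f"welcome email queued for {event['email']}"


def dispatch(event: dict) -> str:
    # The producer never calls a handler directly; it only emits the event.
    handler = _routes.get(event["type"])
    if handler is None:
        return f"no handler for {event['type']}"
    return handler(event)


if __name__ == "__main__":
    events: List[dict] = [
        {"type": "file.uploaded", "key": "photos/cat.jpg"},
        {"type": "user.registered", "email": "ada@example.com"},
        {"type": "db.updated", "table": "orders"},
    ]
    for e in events:
        print(dispatch(e))
```

Adding a new reaction to an existing event means registering another handler; the code that emits the event does not change.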

Designing Efficient Serverless Applications

Design is a critical component in ensuring that serverless applications perform optimally. An efficient design aligns with both business needs and technical requirements.

Adopt Modularity

Following the Single Responsibility Principle (SRP) by breaking applications down into small, independent functions makes them easier to maintain and scale. For example, in an e-commerce scenario, use separate functions for inventory, order processing, and payment handling.
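A minimal sketch of that e-commerce split follows, with one function per responsibility. The in-memory stock table, the event fields, and the stubbed payment step are hypothetical; in a real deployment each function would be deployed separately and chained by events or a workflow service.

```python
# Hypothetical in-memory stock table standing in for the inventory service's database.
INVENTORY = {"sku-1": 5}


def reserve_inventory(event: dict) -> dict:
    """Inventory function: only checks and adjusts stock."""
    sku, qty = event["sku"], event["quantity"]
    if INVENTORY.get(sku, 0) < qty:
        return {"order_id": event["order_id"], "reserved": False}
    INVENTORY[sku] -= qty
    return {"order_id": event["order_id"], "reserved": True}


def process_order(event: dict) -> dict:
    """Order function: only validates the request and records the order."""
    if event["quantity"] <= 0:
        raise ValueError("quantity must be positive")
    return {"order_id": event["order_id"], "status": "created"}


def handle_payment(event: dict) -> dict:
    """Payment function: only charges the customer (stubbed; no real gateway)."""
    return {"order_id": event["order_id"], "charged_cents": event["amount_cents"]}


if __name__ == "__main__":
    order = {"order_id": "o-1", "sku": "sku-1", "quantity": 2, "amount_cents": 1998}
    # Calling the steps in sequence here only simulates the event chain.
    print(process_order(order))
    if reserve_inventory(order)["reserved"]:
        print(handle_payment(order))
```

Because each function owns one concern, a spike in checkout traffic scales the payment function independently, and a bug in inventory logic can be fixed and redeployed without touching payments.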

Implement Robust Error Handling

Because serverless functions run in ephemeral environments, strong error handling and logging are essential. Beyond capturing and resolving exceptions, a robust strategy includes retry mechanisms and circuit breakers that prevent cascading failures. PCG offers guidance on these practices.
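The sketch below combines the two patterns mentioned above: a retry helper with exponential backoff and a small circuit breaker that fails fast once a dependency has failed repeatedly. The class names, thresholds, and delays are illustrative assumptions, not a specific library's API.

```python
import logging
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")
log = logging.getLogger(__name__)


class CircuitOpenError(Exception):
    """Raised instead of calling a dependency that is currently failing."""


class CircuitBreaker:
    """Opens after `threshold` consecutive failures; once `reset_after`
    seconds have passed it lets a trial call through (half-open)."""

    def __init__(self, threshold: int = 3, reset_after: float = 30.0) -> None:
        self.threshold = threshold
        self.reset_after = reset_after
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if time.monotonic() - self.opened_at < self.reset_after:
                raise CircuitOpenError("dependency unhealthy; failing fast")
            self.opened_at = None  # half-open: allow a trial call
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.threshold:
                self.opened_at = time.monotonic()
            raise
        self.failures = 0  # a success closes the circuit again
        return result


def retry(fn: Callable[[], T], attempts: int = 3, base_delay: float = 0.2) -> T:
    """Retry with exponential backoff; never retries an open circuit."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except CircuitOpenError:
            raise  # retrying would only add load to a failing dependency
        except Exception as exc:
            log.warning("attempt %d/%d failed: %r", attempt, attempts, exc)
            if attempt == attempts:
                raise
            time.sleep(base_delay * 2 ** (attempt - 1))
    raise AssertionError("unreachable")
```

A caller would wrap a downstream request as `retry(lambda: breaker.call(send_request))`, where `send_request` is a hypothetical function. Since retries can repeat a side effect, the wrapped operation should be safe to run more than once.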

Optimize Function Execution

Serverless billing is typically based on the number of invocations plus execution time weighted by allocated memory, so optimizing your code for speed and efficiency directly affects cost. Choosing the right memory and CPU configuration, along with efficient coding practices, can reduce runtime costs significantly. For more detailed strategies, see Trigyn's recommendations.
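The arithmetic behind that trade-off can be sketched as a request charge plus a compute charge measured in GB-seconds. The rates below are hypothetical placeholders, and the two workload profiles are invented for illustration. On AWS Lambda, for example, CPU is allocated in proportion to configured memory, which is why a larger memory setting can sometimes finish fast enough to cost less overall.

```python
# Hypothetical placeholder rates; real prices vary by provider, region,
# and architecture, and free tiers are ignored here.
PRICE_PER_GB_SECOND = 0.00001667
PRICE_PER_MILLION_REQUESTS = 0.20


def monthly_cost(invocations: int, avg_duration_ms: float, memory_mb: int) -> float:
    """Request charge plus compute charge (duration x memory, in GB-seconds)."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute = gb_seconds * PRICE_PER_GB_SECOND
    requests = invocations / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    return compute + requests


if __name__ == "__main__":
    # Same workload at two memory sizes (durations are assumed, not measured).
    small = monthly_cost(10_000_000, avg_duration_ms=800, memory_mb=512)
    large = monthly_cost(10_000_000, avg_duration_ms=350, memory_mb=1024)
    print(f"512 MB: ${small:.2f}   1024 MB: ${large:.2f}")
```

With these assumed numbers, doubling memory while cutting duration from 800 ms to 350 ms lowers the compute charge from 4,000,000 to 3,500,000 GB-seconds, so the larger configuration is cheaper. Measuring real durations at several memory sizes is the only way to know whether that holds for a given function.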

Common Challenges in Serverless Computing and How to Overcome Them

While serverless computing offers many benefits, it’s important to be aware of potential challenges and strategies to mitigate them.

Cold Start Latency

Cold starts add latency when a function must be initialized in a new execution environment, typically after a period of inactivity or when the platform scales out. This is particularly critical for performance-sensitive applications such as real-time communications. Cloud providers address this with techniques such as pre-warming containers and provisioned concurrency. GeeksforGeeks offers insights into how AWS Lambda and other platforms are mitigating these delays.
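A complementary code-level practice is to perform expensive setup once at module load instead of inside the handler. Warm invocations then reuse the initialized resources, and with provisioned concurrency that initialization runs before requests arrive. In the sketch below, `_expensive_setup` and its delay are hypothetical stand-ins for loading configuration, SDK clients, or a model.

```python
import time


def _expensive_setup() -> dict:
    """Hypothetical stand-in for loading config, SDK clients, or a model."""
    time.sleep(0.2)  # simulated initialization cost
    return {"initialized_at": time.time()}


# Module scope runs once per execution environment, i.e. during the cold start.
RESOURCES = _expensive_setup()
_invocation_count = 0


def handler(event, context):
    global _invocation_count
    _invocation_count += 1
    # Warm invocations reuse RESOURCES instead of repeating the setup.
    return {
        "invocation": _invocation_count,
        "initialized_at": RESOURCES["initialized_at"],
    }


if __name__ == "__main__":
    for _ in range(3):
        start = time.perf_counter()
        result = handler({}, None)
        elapsed_ms = (time.perf_counter() - start) * 1000
        print(result["invocation"], f"{elapsed_ms:.2f} ms")
```

The invocation counter shows that module-level state survives between warm invocations of the same environment. That reuse is a performance optimization only: the platform may discard an environment at any time, so correctness must not depend on it.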

Vendor Lock-In

Deep integration with a specific cloud provider can create dependency issues that make it difficult to switch platforms. To counteract this, consider open-source serverless frameworks such as OpenFaaS and Knative, which run on Kubernetes and make it easier to move workloads across environments. Kubeless was once a common choice in this category but is no longer actively maintained.
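Independent of the framework you choose, keeping business logic free of provider SDKs reduces the cost of switching. The sketch below uses a ports-and-adapters layout: the logic depends only on a small `BlobStore` interface, and a thin adapter binds it to a concrete backend. All names here are hypothetical; only the in-memory adapter is implemented.

```python
from typing import Dict, Protocol


class BlobStore(Protocol):
    """Port: the only storage operation the business logic needs."""

    def put(self, key: str, data: bytes) -> None: ...


def save_report(store: BlobStore, report_id: str, body: str) -> str:
    """Provider-agnostic business logic; it imports no cloud SDK."""
    key = f"reports/{report_id}.txt"
    store.put(key, body.encode("utf-8"))
    return key


class InMemoryStore:
    """Adapter for local tests. A hypothetical S3 adapter would implement the
    same put() by calling boto3's s3_client.put_object(Bucket=..., Key=key, Body=data)."""

    def __init__(self) -> None:
        self.objects: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self.objects[key] = data


def make_handler(store: BlobStore):
    """Thin, platform-specific entry point wired to whichever adapter is configured."""
    def handler(event: dict, context: object) -> dict:
        key = save_report(store, event["report_id"], event["body"])
        return {"statusCode": 201, "key": key}
    return handler


if __name__ == "__main__":
    handler = make_handler(InMemoryStore())
    print(handler({"report_id": "42", "body": "quarterly totals"}, None))
```

Moving to another provider then means writing a new adapter and entry point, while `save_report` and its tests stay unchanged.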

Monitoring and Debugging Complexities

The distributed, event-driven nature of serverless functions makes it harder to track performance and debug issues. Distributed tracing and monitoring tools such as AWS X-Ray, Azure Monitor, and Google Cloud Trace help developers maintain observability across their applications.
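Tracing tools like these follow a request across functions by propagating an identifier with it. The hand-rolled sketch below shows that underlying idea with a correlation ID carried in each event and written into structured JSON logs, so one query can reconstruct a request's path. The field names and the event shape are assumptions for illustration, not the format any of the tools above uses.

```python
import json
import logging
import sys
import uuid

logger = logging.getLogger("orders")
logger.setLevel(logging.INFO)
_stream = logging.StreamHandler(sys.stdout)
_stream.setFormatter(logging.Formatter("%(message)s"))
logger.addHandler(_stream)


def log_event(correlation_id: str, function: str, message: str, **fields) -> str:
    """Emit one JSON log line that can be filtered by correlation_id."""
    record = {"correlation_id": correlation_id, "function": function,
              "message": message, **fields}
    line = json.dumps(record, sort_keys=True)
    logger.info(line)
    return line


def create_order_handler(event: dict, context: object) -> dict:
    # Reuse the upstream ID when present; otherwise this is the first hop.
    correlation_id = event.get("correlation_id") or str(uuid.uuid4())
    log_event(correlation_id, "create_order", "order received",
              order_id=event["order_id"])
    # Forward the ID in the event for the next function (hypothetical shape).
    return {"correlation_id": correlation_id, "order_id": event["order_id"]}


if __name__ == "__main__":
    downstream_event = create_order_handler({"order_id": "o-1"}, None)
    print(downstream_event)
```

Every function that receives `downstream_event` logs with the same ID, which turns scattered per-function logs into a single request timeline.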

Best Practices for Serverless Application Development

Integrating best practices into your serverless development strategy can significantly enhance performance, security, and maintainability.

Adhere to the Separation of Concerns

Ensuring that each function is focused on a single task not only simplifies debugging but also improves scalability. This approach is well-documented in best practice guides from CIO.

Implement Robust Error Handling and Logging

Design your serverless functions with strong error detection and recovery in mind. Logging meaningful error messages and incorporating retry logic is essential to maintain a robust system architecture.

Optimize Performance

Pay close attention to the function execution time and resource utilization. Efficient handling of these components can reduce costs and improve the overall performance of your serverless applications.

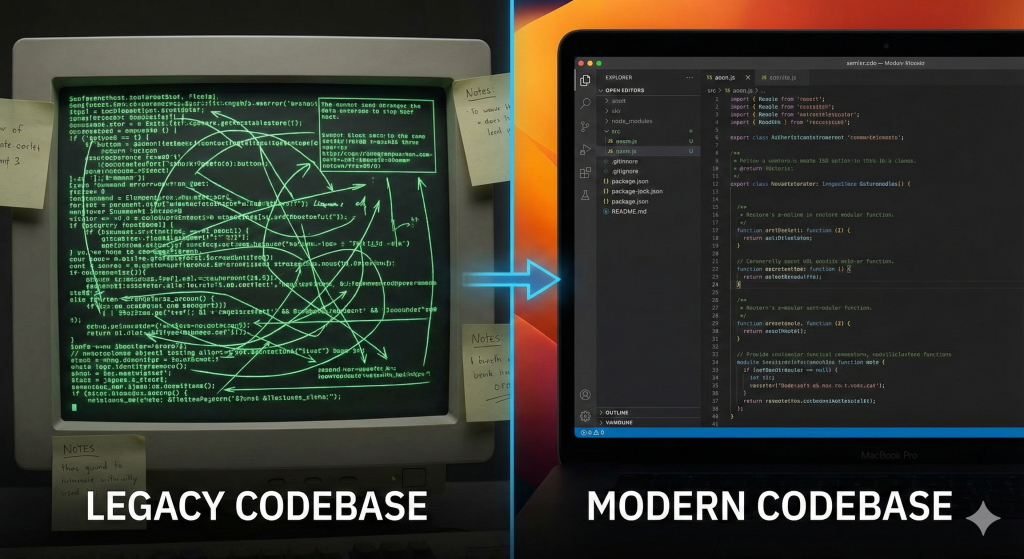

Latest Advancements and Trends in Serverless Technologies

The serverless landscape continues to evolve with ongoing innovations and trends that enhance performance and simplify the development process.

Edge Computing Integration

As edge computing becomes more mainstream, serverless architectures are expanding closer to the end user. This reduces latency and improves performance, especially for applications that require real-time processing.

Increased Support for Containers

Modern serverless platforms now provide native support for containerized applications. This convergence lets developers combine the benefits of containers, such as portability and consistency, with the scalability of serverless.

Enhanced Security Measures

With security as a top priority, cloud providers are continuously enhancing their serverless frameworks to include automated threat detection, secure identity management, and streamlined compliance tools.

Real-Life Case Studies: Success Stories with Serverless

Several organizations have successfully integrated serverless computing to transform their business operations. By leveraging the agility and scalability of serverless architecture, companies have optimized their applications while significantly reducing costs and improving customer experiences.

E-Commerce Transformation

One leading e-commerce platform split its operations into discrete serverless functions for order processing, inventory management, and payment processing. This modular approach not only enabled seamless scaling during peak shopping seasons but also enhanced system reliability and performance.

Financial Services Innovation

A major financial institution adopted serverless solutions to streamline its transaction processing systems. With focused functions and advanced monitoring tools, it improved real-time decision-making and reduced processing times during peak loads.

Future of Serverless Computing: Predictions and Innovations

Looking ahead, serverless computing is poised to further disrupt traditional infrastructure paradigms. Several predictions have emerged from industry experts:

Greater Adoption Across Industries

The flexibility and cost savings associated with serverless architectures will continue to attract a wider range of sectors—from fintech and healthcare to IoT and gaming.

Innovative Development Tools

We can expect to see an emergence of more sophisticated development platforms, debugging tools, and monitoring solutions tailored specifically for serverless deployments. These tools will help in automating and optimizing every stage of the development lifecycle.

Reduced Cold Start Impact

Continued innovations in provider technologies and strategies such as provisioned concurrency will further reduce cold start latency, making serverless viable for a growing range of latency-sensitive applications. For further insights, GeeksforGeeks outlines some of the top trends shaping the future of this domain.

FAQ

Q1: What exactly is serverless computing?

A: Serverless computing abstracts server management away from developers, allowing applications to run without provisioning or managing servers. The cloud provider manages the underlying infrastructure, enabling automatic scaling and a pay-as-you-go pricing model.

Q2: How does serverless computing improve cost efficiency?

A: Serverless platforms charge based on usage, so you pay only for the requests your functions handle and the compute time they consume. This pay-per-use model helps optimize costs, especially for applications with variable workloads.

Q3: What are the most common challenges faced in serverless deployments?

A: Common challenges include cold start latency, vendor lock-in, and difficulties in monitoring and debugging distributed, event-driven functions. However, solutions and best practices exist to successfully mitigate these challenges.

Q4: Can serverless computing handle large-scale applications?

A: Yes. Serverless architectures are designed to scale automatically and can handle significant loads by allocating resources dynamically based on demand, within the concurrency quotas each provider enforces.

Conclusion: Embracing Serverless for Future-Ready Solutions

Serverless computing is not just a fleeting trend—it is a transformative approach that redefines how applications are built, deployed, and scaled. By eliminating the overhead of server management, organizations can focus on innovation and rapid development, unlocking unprecedented levels of agility and cost savings.

Whether you are a seasoned developer, an IT professional, or a business leader looking to optimize operations, embracing serverless technology is a step toward future-proofing your digital infrastructure. With continuous advancements and a growing ecosystem of tools and best practices, serverless computing is paving the way for more efficient, resilient, and scalable applications in the years to come.

By leveraging the insights and strategies discussed in this guide, you can confidently move towards a serverless future and enjoy the benefits of fast deployment, optimized costs, and robust performance across your applications.