Introduction to Generative AI in Software Testing

The evolution of software testing has reached a new milestone with the introduction of generative AI. In this guide, we explore how tools like GPT-5-Codex are changing the process by automating test case generation, improving code quality, and streamlining end-to-end testing. Used well, these capabilities can help developers and QA engineers detect bugs earlier and improve software reliability. Using GPT to generate tests and find bugs is becoming a practical part of everyday testing workflows rather than an experiment. For deeper insights into AI capabilities, refer to our post on Vibe Coding: Revolutionizing Software Development with AI Assistance.

The Advantages of AI in Automated Test Case Generation

One of the most exciting applications of generative AI in testing is its ability to automate test case creation. AI tools such as DevAssure and TestSprite can generate test cases from inputs such as UI mockups, feature specifications, and design artifacts. This automation has reportedly achieved up to 90% reliability and 85% test coverage in under 10 minutes. For instance, DevAssure demonstrates how generative AI can reduce manual effort while still producing robust testing scenarios. Similarly, TestSprite reports in its case studies that its AI approach raised test pass rates dramatically (TestSprite AI Test Case Generation via AI agent). If you’re interested in CI/CD automation surrounding microservices, please check our post on Implementing Continuous Integration and Deployment Pipelines for Microservices.
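To make the idea of generating tests from a feature specification concrete, here is a minimal sketch of the first step: turning a spec into a prompt. The spec shape (`feature`, `acceptanceCriteria`) and the requested reply fields (`name`, `steps`, `expected`) are assumptions made for illustration; they are not the input format of DevAssure, TestSprite, or any other product.

```javascript
// Builds a test-generation prompt from a feature specification.
// The spec shape { feature, acceptanceCriteria } is hypothetical.
function buildTestGenerationPrompt(spec) {
  // Number the acceptance criteria so generated tests can refer back to them.
  const criteria = spec.acceptanceCriteria
    .map((criterion, index) => `${index + 1}. ${criterion}`)
    .join("\n");

  return [
    `Feature: ${spec.feature}`,
    "Acceptance criteria:",
    criteria,
    "Write one positive and one negative test case per criterion.",
    'Reply with a JSON array of objects with "name", "steps", and "expected" fields.',
  ].join("\n");
}

const prompt = buildTestGenerationPrompt({
  feature: "Password reset",
  acceptanceCriteria: [
    "A reset link is emailed to registered addresses",
    "The link expires after one use",
  ],
});

console.log(prompt);
```

Asking for a fixed JSON shape is a design choice rather than a requirement: it makes the reply easier to check automatically before anyone spends time reviewing it.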

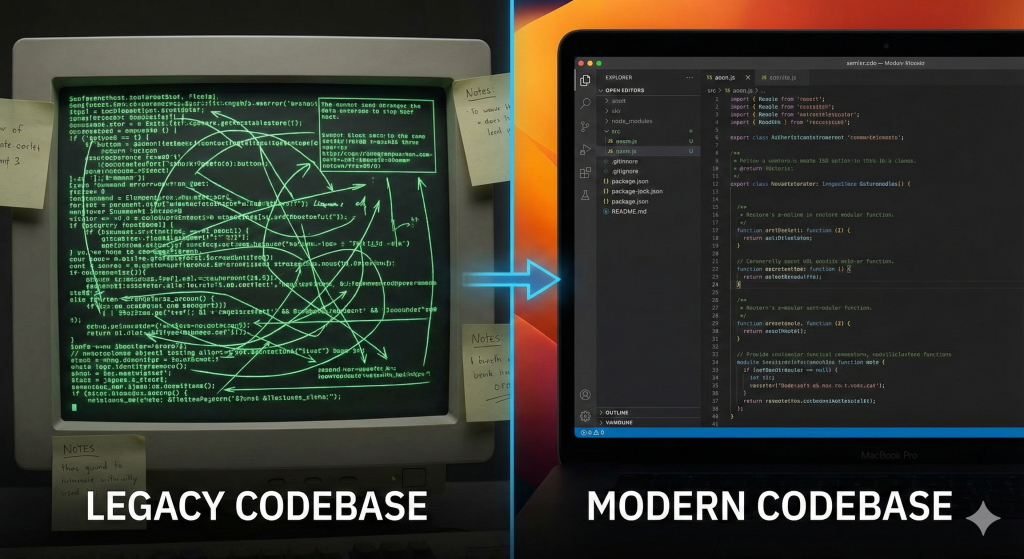

How GPT-5-Codex Improves Code Quality

GPT-5-Codex is at the forefront of integrating AI with software testing, offering significant improvements in code quality. AI-driven tools like Qodo (formerly Codium) bring context-aware code reviews directly into developers’ IDEs, enabling early detection of issues and more consistent enforcement of coding standards. Such integrations have been credited with reducing the number of bugs introduced early in the development lifecycle. The AI reviews not only refine coding practices but also serve as a learning tool for less experienced team members, helping every piece of code meet the team’s quality standards. For more detailed insights, visit the Qodo reference. Additionally, for a comprehensive look at building robust APIs, which closely relates to maintaining code quality, see Building Robust GraphQL APIs in Go.

Streamlining End-to-End Testing with AI

End-to-end (E2E) testing is one of the most complex parts of software validation, often requiring extensive manual effort to validate intricate user workflows. Generative AI approaches such as GenIA-E2ETest streamline this process by automatically generating executable test scripts from natural language descriptions. This method reportedly achieved an average precision of 82% and a recall of 85% (GenIA-E2ETest Study). Transforming natural language requirements into executable code not only speeds up the testing cycle but also minimizes manual adjustments. For additional strategies in serverless environments, our post on Automating API Key Rotation in Serverless Applications might be beneficial.
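To show what precision and recall mean for generated test scripts, here is a small worked sketch using the standard definitions: precision is the share of generated steps that are correct, and recall is the share of expected steps that were generated. The step lists are invented for illustration, and the source does not state how the GenIA-E2ETest study matched generated elements to a reference, so treat this as the general formula rather than the study's method.

```javascript
// Precision and recall over sets of test steps (illustrative data only).
function precisionRecall(generated, expected) {
  const generatedSet = new Set(generated);
  const expectedSet = new Set(expected);

  // A true positive is a generated step that also appears in the reference.
  let truePositives = 0;
  for (const step of generatedSet) {
    if (expectedSet.has(step)) truePositives += 1;
  }

  const precision = generatedSet.size === 0 ? 0 : truePositives / generatedSet.size;
  const recall = expectedSet.size === 0 ? 0 : truePositives / expectedSet.size;
  return { precision, recall };
}

const generatedSteps = [
  "open /login",
  "fill username",
  "fill password",
  "click submit",
  "click help", // not part of the reference script
];
const expectedSteps = [
  "open /login",
  "fill username",
  "fill password",
  "click submit",
  "assert dashboard visible", // missed by the generated script
];

const metrics = precisionRecall(generatedSteps, expectedSteps);
console.log(metrics); // { precision: 0.8, recall: 0.8 }
```

Here four of the five generated steps are correct (precision 4/5) and four of the five expected steps were produced (recall 4/5).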

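A code snippet that integrates AI-generated test cases might look like this:

    // Pseudocode for integrating GPT-5-Codex in test case generation.
    // `gpt5codex` and `executeTestCases` are placeholders, not a real SDK:
    // substitute your own model client and test runner.
    const generateTestCases = async (featureDescription) => {
      const response = await gpt5codex.generate({
        prompt: `Generate test cases for: ${featureDescription}`,
        max_tokens: 500, // cap the length of the reply
      });
      // Assumes the client exposes parsed test cases on `testCases`
      return response.testCases;
    };

    // Usage, wrapped in an async function so `await` is valid outside ES modules
    const main = async () => {
      const testCases = await generateTestCases('User authentication and session management');
      executeTestCases(testCases);
    };

    main().catch(console.error);

Integrating AI Tools into Your Existing Testing Workflow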

Adopting AI in your software testing process involves more than just the installation of a new tool; it requires thoughtful integration into existing workflows. Here are several essential steps to consider:

- Select Appropriate AI Tools: Research and choose AI-driven testing solutions that align with your project’s requirements and integrate seamlessly with your current development environment.

- Train AI Models with Relevant Data: The reliability of AI-generated output depends on the quality of the data the model works from, whether that data arrives through training, fine-tuning, or context supplied in the prompt. Ensure that this data is highly relevant to your application domain.

- Maintain Human Oversight: Despite the advanced capabilities of generative AI, human oversight is indispensable for quality assurance. Regular reviews of AI-generated test cases help maintain high standards and address any anomalies.

- Continuously Monitor and Update: The dynamic nature of software requires continuous monitoring of AI tools’ performance, with regular updates to adapt to new features and requirements.
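The human oversight step above can be supported by a cheap automated check that runs before a person reviews anything. The sketch below sorts AI-generated test cases into those that are well-formed enough to review and those that are rejected outright. The test case fields (`name`, `steps`, `expected`) and the function name are hypothetical; adapt them to whatever shape your tool actually returns.

```javascript
// Sorts generated test cases into reviewable and rejected groups.
// Field names (name, steps, expected) are assumptions for illustration.
function triageGeneratedTests(testCases) {
  const readyForReview = [];
  const rejected = [];

  for (const testCase of testCases) {
    const problems = [];
    if (typeof testCase.name !== "string" || testCase.name.trim() === "") {
      problems.push("missing name");
    }
    if (!Array.isArray(testCase.steps) || testCase.steps.length === 0) {
      problems.push("no steps");
    }
    if (typeof testCase.expected !== "string" || testCase.expected.trim() === "") {
      problems.push("no expected result");
    }

    if (problems.length === 0) {
      readyForReview.push(testCase);
    } else {
      // Keep the reasons so a reviewer can see why the case was rejected.
      rejected.push({ testCase, problems });
    }
  }

  return { readyForReview, rejected };
}

const triage = triageGeneratedTests([
  { name: "valid login", steps: ["open /login", "submit form"], expected: "dashboard is shown" },
  { name: "empty steps", steps: [], expected: "error is shown" },
  { name: "", steps: ["open /login"] },
]);

console.log(triage.readyForReview.length, triage.rejected.length);
```

A check like this does not replace review. It only keeps malformed output from consuming reviewer time; the cases in `readyForReview` still need a person to confirm they test the right behavior.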

Case Studies: Success Stories from Industry Leaders

Several organizations have reportedly used generative AI to improve their software testing processes. For instance, companies using DevAssure have reported rapid test case generation, which accelerated their development cycles without sacrificing coverage or reliability. Similarly, organizations implementing GenIA-E2ETest have seen significant efficiency improvements due to the automated conversion of user stories into functional tests. These accounts suggest that AI tools, including those built on models like GPT-5-Codex, can improve both the speed and accuracy of test processes. For further exploration, check out our guide on Harnessing the Full Potential of Pinecone in Large-Scale AI Applications.

Potential Challenges and How to Overcome Them

While the integration of generative AI into software testing brings numerous benefits, it also presents challenges. Some of the common obstacles include:

- Data Quality and Relevance: AI models are only as effective as the data they learn from. Ensuring that your test data is accurate, up-to-date, and contextually relevant is crucial.

- Integration Complexity: Merging AI tools with existing testing frameworks can be challenging, requiring significant planning and expertise.

- Human Oversight: While AI significantly reduces manual effort, it is not infallible. A hybrid approach, where human oversight complements AI capabilities, is essential to validate the generated outcomes.

- Scalability Concerns: The performance of AI tools may vary with the scale of operations, calling for continuous performance monitoring and system optimization.

To overcome these challenges, organizations need to invest in training programs, adopt iterative integration strategies, and consistently monitor AI performance metrics. For additional strategies on optimizing deployment processes, our post on Optimizing Docker Strategies for Deploying Multiple Ollama Models could offer useful insights.
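As one way to monitor AI performance metrics, the sketch below summarizes recorded results for generated tests into an overall pass rate and a list of flaky tests. The record shape (`test`, `passed`) is hypothetical, and "flaky" is defined here as a test that both passed and failed across runs, which only makes sense if the runs were against the same code revision.

```javascript
// Summarizes test run records into a pass rate and a list of flaky tests.
// The record shape { test, passed } is an assumption for illustration.
function summarizeRuns(runs) {
  const counts = new Map();
  let totalPasses = 0;

  for (const { test, passed } of runs) {
    const entry = counts.get(test) || { passes: 0, failures: 0 };
    if (passed) {
      entry.passes += 1;
      totalPasses += 1;
    } else {
      entry.failures += 1;
    }
    counts.set(test, entry);
  }

  // A test with mixed outcomes is treated as flaky.
  const flaky = [];
  for (const [test, entry] of counts) {
    if (entry.passes > 0 && entry.failures > 0) flaky.push(test);
  }

  const passRate = runs.length === 0 ? 0 : totalPasses / runs.length;
  return { passRate, flaky };
}

const summary = summarizeRuns([
  { test: "login", passed: true },
  { test: "login", passed: true },
  { test: "checkout", passed: true },
  { test: "checkout", passed: false },
]);

console.log(summary); // { passRate: 0.75, flaky: [ 'checkout' ] }
```

Tracking these two numbers over time gives an early signal when a model or prompt change starts producing less stable tests.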

Future Trends: What’s Next for AI in Software Testing

The landscape of software testing is poised for further transformation as AI continues to evolve. Future trends may include:

- More Context-Aware Models: AI models will become even more adept at understanding specific domain requirements, resulting in more precise and relevant test cases.

- Seamless CI/CD Integration: Tighter integration with continuous integration and delivery pipelines will allow near-instant testing feedback, shortening the feedback loop and accelerating software releases.

- Enhanced Collaboration Tools: Future AI tools could offer real-time suggestions and collaborative features, making it easier for teams to review and refine test cases collectively.

- Robust Security Testing: As security becomes increasingly critical, AI-driven tools will evolve to address vulnerabilities with improved accuracy.

Conclusion: Making AI a Part of Your Testing Strategy

Incorporating generative AI into your software testing strategy is no longer a futuristic concept; it is increasingly a practical necessity. Tools like GPT-5-Codex provide tangible benefits, from automated test generation to enhanced code quality and streamlined E2E testing. By following best practices in integration and maintaining a balanced approach with human oversight, teams can achieve meaningful improvements in bug detection and overall software quality. Adopting these technologies now helps ensure that your test suite is prepared for the future.

Frequently Asked Questions (FAQ)

What is Generative AI for Testing?

Generative AI for Testing refers to the use of AI algorithms, especially generative models like GPT-5-Codex, to automatically generate test cases, assist in code reviews, and optimize various phases of software testing. It represents a shift from manual testing to a more automated, efficient approach that improves overall software quality.

How does GPT-5-Codex help improve code quality?

Models like GPT-5-Codex, together with tools such as Qodo, provide automated, context-aware code reviews within development environments. Detecting potential issues early helps reduce the number of bugs introduced and enhances overall code quality.

Can AI fully replace human testers?

While AI significantly automates and streamlines many aspects of testing, human oversight remains essential. AI-generated test cases may still require human validation to ensure they meet quality and contextual standards.

What challenges might I face when integrating AI into my testing workflow?

Challenges include ensuring data quality, addressing integration complexities, maintaining necessary human oversight, and scaling AI solutions effectively. Each of these can be managed with careful planning and continuous monitoring of AI performance.

Where can I see AI in action for test case generation?

You can watch a visual demonstration of AI-driven test case generation in this video: Generate Your Test Cases With Ease.

Final Thoughts

The integration of generative AI into software testing is paving the way for a new era of efficiency and reliability in software development. With advanced models like GPT-5-Codex leading the charge, test case generation, code quality checks, and end-to-end testing are becoming faster and more accurate. By adopting these innovative AI tools and strategies, developers and QA engineers can future-proof their testing processes and deliver higher-quality software products. Embrace the journey into AI-enhanced software testing and transform your testing strategy today.