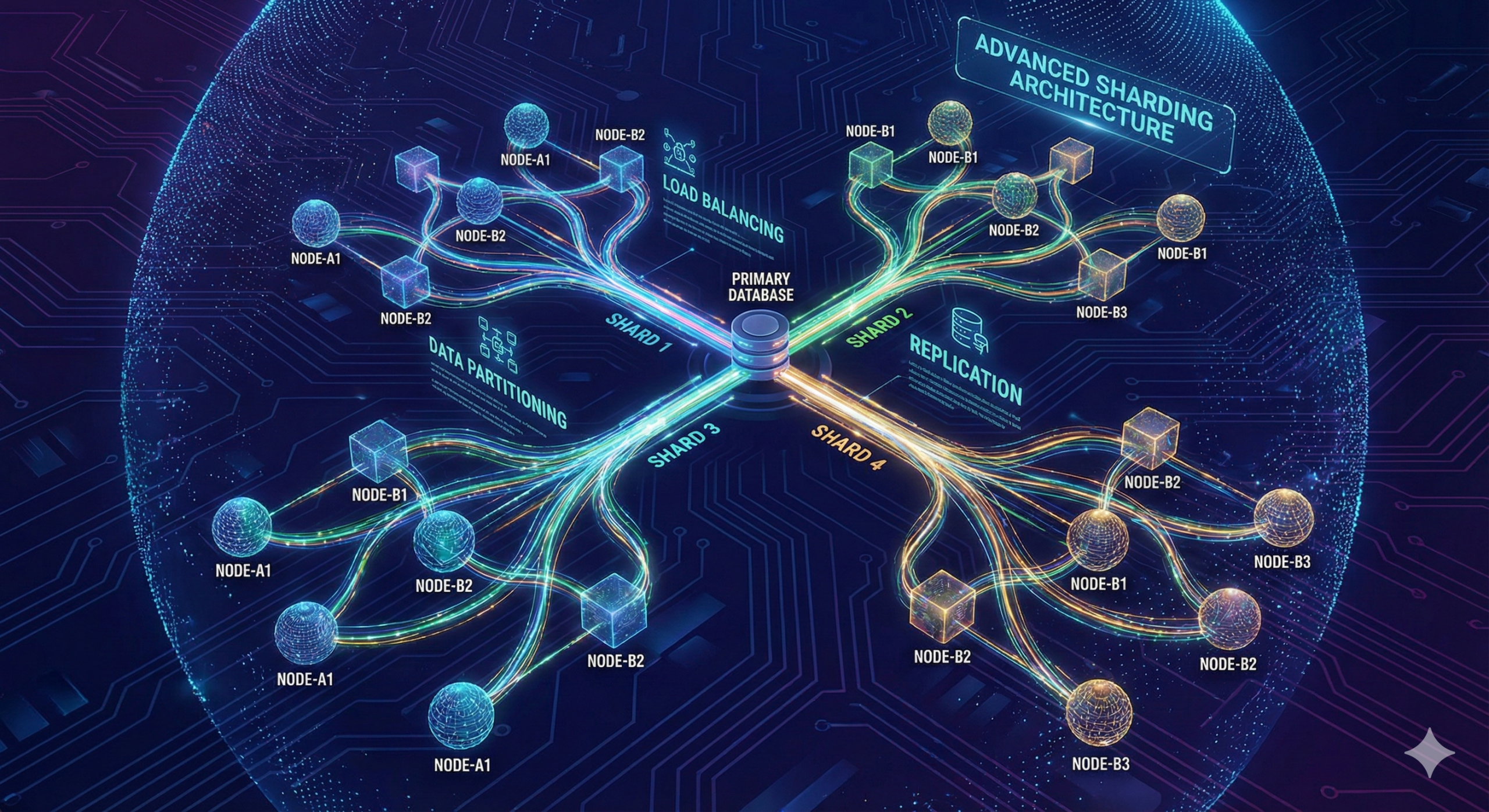

Introduction to Database Sharding

In the era of massive, data-driven applications, databases must scale without sacrificing performance or reliability. Database sharding addresses this by partitioning data across multiple servers, so that each server stores and serves only a subset of the data. Applied well, it lets organizations handle the complexities of Big Data, supporting millions of users and petabytes of information. In this guide, we’ll cover three advanced sharding patterns (consistent hashing, dynamic partitioning, and composite sharding) and explore how they help overcome challenges in data distribution, query performance, and horizontal scalability.

The Need for Advanced Sharding Techniques

The traditional approach to database scaling, vertical scaling, often hits physical and economic limits. Modern enterprises face unpredictable workloads, globally distributed users, and growing data volumes. In such environments, simple sharding schemes show their weaknesses: a fixed range scheme can concentrate traffic on a few shards, and a hash-modulo scheme forces most keys to move whenever the shard count changes, which makes migrations difficult. Advanced sharding techniques address these pain points by offering more intelligent data distribution, resilience against workload shifts, and adaptability as the application evolves. Mastering these techniques is foundational to building resilient architectures that can support applications at internet scale.

Understanding Consistent Hashing

Consistent hashing is an advanced sharding pattern that maps both keys and nodes onto the same hash space (often visualized as a ring), so that each shard or node owns one or more ranges of that space. One of its major advantages is minimized data movement when scaling: adding or removing a node only requires remapping a small portion of the dataset, not the entire dataset. This reduces downtime and risk during cluster changes.

For example, imagine hashing user IDs and distributing them among server nodes. If you add a new node to a cluster of N nodes, only the keys that fall into the new node’s ranges need to be reassigned, which on average is about 1/(N+1) of the data. This lowers the complexity of scaling horizontally and limits the impact of losing a node. With a well-mixed hash function and several virtual nodes per server, keys spread roughly evenly across the ring, which helps avoid hotspots, although a single extremely popular key can still overload the server that owns it.
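The behaviour described above can be sketched in Python as follows. This is a minimal illustration rather than a production implementation: the `HashRing` class, the `stable_hash` helper, the node names, and the choice of 100 virtual nodes per server are all assumptions made for the example.

```python
import bisect
import hashlib


def stable_hash(value: str) -> int:
    """Map a string to a 64-bit integer (MD5 is used for spreading keys, not security)."""
    return int.from_bytes(hashlib.md5(value.encode("utf-8")).digest()[:8], "big")


class HashRing:
    """Consistent-hash ring with virtual nodes (hypothetical helper for illustration)."""

    def __init__(self, nodes=(), vnodes=100):
        self.vnodes = vnodes
        self._points = []  # sorted hash positions on the ring
        self._owner = {}   # hash position -> node name
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        # Each node is placed at `vnodes` positions; 64-bit collisions are ignored here.
        for i in range(self.vnodes):
            point = stable_hash(f"{node}#{i}")
            bisect.insort(self._points, point)
            self._owner[point] = node

    def remove_node(self, node: str) -> None:
        self._points = [p for p in self._points if self._owner[p] != node]
        self._owner = {p: n for p, n in self._owner.items() if n != node}

    def get_node(self, key: str) -> str:
        if not self._points:
            raise ValueError("ring has no nodes")
        # The owner is the first ring position clockwise from the key's hash.
        idx = bisect.bisect_right(self._points, stable_hash(key)) % len(self._points)
        return self._owner[self._points[idx]]


ring = HashRing(["node-a", "node-b", "node-c"])
keys = [f"user-{i}" for i in range(5000)]
before = {k: ring.get_node(k) for k in keys}
ring.add_node("node-d")
moved = [k for k in keys if ring.get_node(k) != before[k]]
print(f"{len(moved) / len(keys):.0%} of keys moved after adding node-d")
```

Every key that moves in this sketch moves to the new node. No key is shuffled between the existing nodes, which is the property that distinguishes consistent hashing from hash-modulo placement.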

Learn more: How to Combine Sharding and Consistent Hashing within a Distributed System? (GeeksforGeeks)

Consistent Hashing Pseudocode Example

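    // Pseudocode for assigning an item to a shard using consistent hashing
    // findClosestShard returns the index of the first shard at or after `hash`
    // on the ring, wrapping around to the start if none is found
    function getShard(itemKey, shards):
        hash = hashFunction(itemKey)
        shardIndex = findClosestShard(hash, shards)
        return shards[shardIndex]

Implementing Dynamic Partitioning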

Dynamic partitioning is a flexible sharding model that automatically adapts to changing data distributions and workloads. When a particular shard grows beyond a defined threshold, a dynamic partitioning algorithm can split it into smaller, more manageable units or redistribute data to less utilized shards. This approach reduces the risk of performance degradation when skewed data or traffic concentrates on a few shards (commonly known as ‘hotspots’).
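As a rough sketch of the threshold-based split described above, the following Python example keeps range shards in memory and splits a shard at its median key once it exceeds a row limit. The `RangeShard` and `DynamicPartitioner` names, the integer key space, and the `max_rows` threshold are assumptions for illustration. A real system would also move data between servers and update routing metadata atomically.

```python
from dataclasses import dataclass, field


@dataclass(eq=False)
class RangeShard:
    """Holds rows whose keys fall in the half-open range [low, high)."""
    low: int
    high: int
    rows: dict = field(default_factory=dict)


class DynamicPartitioner:
    """Splits a shard in two when it exceeds `max_rows` (hypothetical sketch)."""

    def __init__(self, low: int, high: int, max_rows: int):
        if max_rows < 1:
            raise ValueError("max_rows must be at least 1")
        self.max_rows = max_rows
        self.shards = [RangeShard(low, high)]  # kept sorted by `low`

    def _find(self, key: int) -> RangeShard:
        for shard in self.shards:
            if shard.low <= key < shard.high:
                return shard
        raise KeyError(f"{key} is outside the partitioned key space")

    def put(self, key: int, value) -> None:
        shard = self._find(key)
        shard.rows[key] = value
        if len(shard.rows) > self.max_rows:
            self._split(shard)

    def get(self, key: int):
        return self._find(key).rows[key]

    def _split(self, shard: RangeShard) -> None:
        keys = sorted(shard.rows)
        pivot = keys[len(keys) // 2]  # the median key becomes the new boundary
        right = RangeShard(pivot, shard.high,
                           {k: v for k, v in shard.rows.items() if k >= pivot})
        shard.rows = {k: v for k, v in shard.rows.items() if k < pivot}
        shard.high = pivot
        self.shards.insert(self.shards.index(shard) + 1, right)


partitioner = DynamicPartitioner(low=0, high=1000, max_rows=4)
for key in range(0, 100, 10):
    partitioner.put(key, f"row-{key}")
print([(s.low, s.high, len(s.rows)) for s in partitioner.shards])
```

Splitting at the median key keeps the two halves close to equal in size, but it balances row counts only. A shard that is hot because of traffic rather than volume would need a load-based trigger instead.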

In blockchain sharding protocols such as DynaShard, adaptive shard management is combined with hybrid consensus mechanisms to coordinate these operations with minimal manual intervention, while maintaining high system availability and data security.

Find out more about dynamic partitioning at: DynaShard: Secure and Adaptive Blockchain Sharding Protocol with Hybrid Consensus and Dynamic Shard Management (arxiv.org)

Dynamic Partitioning Example in SQL

For instance, in a distributed SQL database, dynamic partitioning might look like:

    -- Pseudocode for splitting a hot shard
    -- condition_A and condition_B are placeholders that together cover every row of shard 7
    IF (SELECT COUNT(*) FROM users WHERE shard_id = '7') > THRESHOLD THEN
        -- Split shard 7 into shards 7A and 7B
        UPDATE users SET shard_id = '7A' WHERE shard_id = '7' AND condition_A;
        UPDATE users SET shard_id = '7B' WHERE shard_id = '7' AND condition_B;
    END IF;

Exploring Composite Sharding

Composite sharding is a sophisticated database sharding pattern that combines two or more sharding strategies to address complex distribution needs, such as geography, service class, or multi-tenancy. For example, data may first be sharded by region (range or list sharding), and within each region, further sharded with consistent hashing. This hybrid approach enables organizations to optimize for both high-level organizational needs and fine-grained load distribution.

This strategy is invaluable in scenarios where a single sharding key cannot provide an even or logically-sound data split. By carefully designing composite sharding strategies, developers can achieve balanced distribution, reduced risk of hotspots, and support cross-domain business requirements.
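A two-level lookup of this kind might be sketched in Python as shown below. The first level is a plain region lookup (list sharding). For the second level this example substitutes rendezvous (highest-random-weight) hashing, a close relative of ring-based consistent hashing that needs less code. The `REGION_SHARDS` topology, the shard names, and both function names are hypothetical.

```python
import hashlib

# Hypothetical topology: each region owns its own list of shards.
REGION_SHARDS = {
    "eu": ["eu-1", "eu-2", "eu-3"],
    "us": ["us-1", "us-2"],
}


def _weight(shard: str, key: str) -> int:
    """Pseudo-random but deterministic score for a (shard, key) pair."""
    digest = hashlib.sha256(f"{shard}:{key}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def get_composite_shard(region: str, user_id: str, region_shards: dict) -> str:
    """Level 1: list sharding by region. Level 2: rendezvous hashing inside the region."""
    candidates = region_shards.get(region)
    if not candidates:
        raise ValueError(f"no shards configured for region {region!r}")
    # The shard with the highest score for this user wins.
    return max(candidates, key=lambda shard: _weight(shard, user_id))


print(get_composite_shard("eu", "user-42", REGION_SHARDS))
```

Because each user is scored against every shard in the region independently, adding a shard to a region only pulls over the users for whom the new shard scores highest. Users in other regions are unaffected.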

Official documentation reference: Sharding Methods (Oracle Docs)

Composite Sharding Example

    // Example: First by region, then by user ID hash
    function getCompositeShard(region, userId, regionShards):
        regionShardList = regionShards[region]
        userShard = consistentHash(userId, regionShardList)
        return userShard

Comparative Analysis: When to Use Each Technique

Each advanced database sharding pattern offers unique strengths:

- Consistent Hashing: Ideal when adding or removing nodes frequently; minimizes data movement and avoids hotspots.

- Dynamic Partitioning: Best suited for environments with unpredictable or rapidly shifting workloads, as it automatically adapts to imbalances.

- Composite Sharding: Preferred for complex organizational data models where single-attribute sharding is insufficient.

Choosing the right strategy depends on your application’s data growth pattern, access profile, and operational demands. Some high-scale applications may integrate multiple techniques to balance consistency, performance, and operational simplicity.

Addressing Query Performance Challenges

While advanced sharding enables scaling, it can introduce challenges—especially for cross-shard queries and distributed transactions. Queries spanning multiple shards may become inefficient, leading to increased latency and resource consumption. To mitigate this:

- Implement parallel query execution to simultaneously query all relevant shards and aggregate results.

- Design schemas to minimize the need for cross-shard joins by denormalizing data or introducing reference tables where appropriate.

- Consider application-level query routing to direct specific requests to the correct shard based on known keys.
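The first and third mitigations above can be sketched together in Python. In this example, `route` sends a request with a known shard key to a single shard, while `scatter_gather` queries every shard in parallel and merges the partial results. The in-memory `SHARDS` dictionary stands in for real shard connections, and the modulo routing rule and all names are assumptions made for illustration.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical in-memory stand-ins for three shards, placed by user_id % 3.
SHARDS = {
    "shard-0": [{"user_id": 3, "total": 30}, {"user_id": 6, "total": 75}],
    "shard-1": [{"user_id": 1, "total": 120}, {"user_id": 4, "total": 10}],
    "shard-2": [{"user_id": 2, "total": 55}, {"user_id": 5, "total": 90}],
}


def route(user_id: int) -> str:
    """Application-level routing: a known shard key maps to exactly one shard."""
    return f"shard-{user_id % len(SHARDS)}"


def query_shard(shard_name: str, min_total: int) -> list:
    """Run the filter on one shard (a real system would issue a query here)."""
    return [row for row in SHARDS[shard_name] if row["total"] >= min_total]


def scatter_gather(min_total: int) -> list:
    """Query all shards in parallel, then merge and sort the partial results."""
    with ThreadPoolExecutor(max_workers=len(SHARDS)) as pool:
        partials = list(pool.map(lambda name: query_shard(name, min_total), SHARDS))
    merged = [row for part in partials for row in part]
    return sorted(merged, key=lambda row: row["total"], reverse=True)


print(route(4))            # single-shard lookup for a known key
print(scatter_gather(50))  # cross-shard query touching every shard
```

The routed lookup touches one shard, whereas the scatter-gather query is only as fast as its slowest shard, which is why schema designs that keep common queries on a single shard tend to pay off.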

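Additionally, maintaining ACID properties across shards is a significant technical hurdle. The Two-Phase Commit protocol provides atomic commits across shards at the cost of extra round trips and blocking while a transaction is in doubt. Compensating transactions (as in the saga pattern) avoid that blocking but give up isolation, settling for eventual consistency. Either option introduces complexity and performance trade-offs.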
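To make the Two-Phase Commit idea concrete, here is a deliberately simplified Python sketch. Each `Participant` represents one shard, and the coordinator function `two_phase_commit` applies a set of balance changes only if every shard votes yes in the prepare phase. All names are hypothetical, and the sketch leaves out what makes real implementations hard: durable logs, locking, timeouts, and recovery from coordinator failure.

```python
class Participant:
    """One shard taking part in a distributed transaction (in-memory stand-in)."""

    def __init__(self, name: str, balances: dict):
        self.name = name
        self.balances = dict(balances)
        self._staged = None  # change that has been prepared but not yet committed

    def prepare(self, account: str, delta: int) -> bool:
        """Phase 1: vote yes only if the change is valid; stage it without applying."""
        new_balance = self.balances.get(account, 0) + delta
        if new_balance < 0:
            return False
        self._staged = (account, new_balance)
        return True

    def commit(self) -> None:
        """Phase 2 (success): apply the staged change."""
        if self._staged is not None:
            account, new_balance = self._staged
            self.balances[account] = new_balance
            self._staged = None

    def rollback(self) -> None:
        """Phase 2 (failure): discard the staged change."""
        self._staged = None


def two_phase_commit(changes: list) -> bool:
    """changes is a list of (participant, account, delta) tuples."""
    prepared = []
    for participant, account, delta in changes:
        if participant.prepare(account, delta):
            prepared.append(participant)
        else:
            for p in prepared:
                p.rollback()
            return False
    for p in prepared:
        p.commit()
    return True


shard_a = Participant("shard-a", {"alice": 100})
shard_b = Participant("shard-b", {"bob": 0})
ok = two_phase_commit([(shard_a, "alice", -50), (shard_b, "bob", +50)])
print(ok, shard_a.balances, shard_b.balances)
```

If any shard rejects its part of the transfer, the shards that already prepared discard their staged changes, so no shard ends up with a partial update.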

Deep dive: Database Sharding and Its Challenges (Java Code Geeks)

Enhancing System Scalability through Sharding

Advanced sharding techniques directly improve an application’s ability to scale. By spreading both data and query load across multiple servers, sharded databases can accommodate rapid data growth and sudden surges in workload. Because each shard holds only part of the data, a node failure affects only that shard’s keys, and when shards are also replicated across zones or regions, wider outages have limited impact on service continuity. Moreover, techniques like consistent hashing and dynamic partitioning enable near-seamless scale-out and scale-in operations, which are critical for cloud-native architectures and elastic resource management.

Adaptation to workload shifts, avoidance of hotspots, and simplified scaling are key outcomes of well-implemented sharding strategies. This makes advanced sharding pivotal for any platform aspiring to serve global user bases or real-time analytics.

For additional reading: Database Sharding Explained for Scalable Systems (Aerospike)

Case Studies: Successful Implementations

Let’s look at how some leading platforms have leveraged advanced database sharding patterns:

- Social Media Networks: Large networks often shard data first by geography or market segment, and then by user ID or content type. Composite sharding ensures user data remains physically close to its primary consumers, while consistent hashing inside each region maintains balance as the user base shifts.

- E-commerce Giants: These platforms dynamically partition shards as product catalogs or user traffic grows in certain regions, facilitating rapid adaptation to campaigns and seasonal spikes.

- Blockchain Systems: Leveraging dynamic partitioning with hybrid consensus protocols, next-generation blockchain platforms balance security, adaptability, and high-throughput transaction processing, as highlighted in recent research (DynaShard).

Across these industries, the right blend of sharding techniques supports uninterrupted performance under heavy load and ever-changing operational environments.

Future Trends in Database Sharding

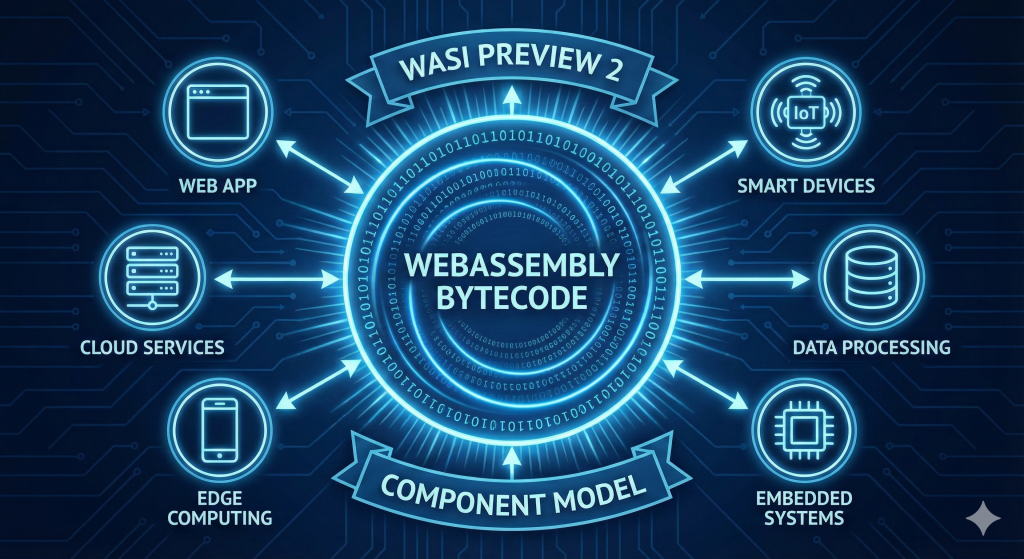

The future of database sharding is being shaped by automation, AI-driven workload prediction, and multi-layered sharding regimes. Emerging trends include:

- AI-Driven Shard Management: Machine learning models predict workload shifts, proactively reshard data, and balance cluster loads in real time.

- Serverless and Cloud-Native Databases: Sharding strategies are being tightly integrated with container orchestration for elastic scaling in cloud environments.

- Composable Sharding Frameworks: Modularity allows organizations to mix-and-match sharding strategies as operational requirements evolve.

- Enhanced Cross-Shard Query Optimization: Future DBMS releases are expected to offer more efficient native support for cross-shard joins and global secondary indexes.

These innovations should reduce the manual burden on database administrators and move even the largest applications closer to “set it and forget it” scalability.

Conclusion: Choosing the Right Sharding Strategy for Your Needs

Mastering advanced database sharding techniques is essential to building and maintaining high-performing, scalable, and resilient data architectures at massive scale. Whether you select consistent hashing, dynamic partitioning, composite sharding, or a custom blend, the critical factors to evaluate include anticipated workload patterns, geographic distribution, data mutability, and operational complexity.

By understanding the strengths and trade-offs of each advanced strategy, and by leveraging proven frameworks and community best practices, developers and DBAs can ensure their applications thrive, no matter how rapidly their data grows. Start with your application’s unique needs, iterate with real-world testing, and adopt dynamic, composite, or hash-based sharding patterns as your system evolves.

Frequently Asked Questions (FAQ)

What is the biggest challenge with database sharding?

The primary difficulties stem from managing distributed transactions, ensuring data consistency, and optimizing cross-shard queries. Hotspot avoidance and seamless data migration are also key challenges.

Can I combine multiple sharding strategies?

Absolutely. Composite sharding, which layers two or more of range, list, and hash-based partitioning, is well suited to complex applications with diverse data access patterns.

Is sharding always the best choice for scaling databases?

No. Sharding adds complexity and management overhead. It is usually considered when vertical scaling becomes impractical or prohibitively expensive, and when the application’s workload and data volumes demand it.

How do I prevent hotspots in a sharded database?

Using advanced patterns like consistent hashing and dynamic partitioning can help evenly distribute data and adapt to shifting workloads, thus mitigating hotspots.

Where can I learn more?

Explore detailed articles and documentation at: